1. Introduction

1.1. Terms of Use

In order to use this document, you are required to agree to abide by the following terms. If you do not agree with the terms, you must immediately delete or destroy this document and all its duplicated copies.

-

Copyrights and all other rights of this document shall belong to NTT DATA or third party possessing such rights.

-

This document may be reproduced, translated or adapted, in whole or in part for personal use. However, deletion of the terms given on this page and copyright notice of NTT DATA is prohibited.

-

This document may be changed, in whole or in part for personal use. The creation of secondary work using this document is allowed. However, “Reference document: TERASOLUNA Batch Framework for Java (5.x) Development Guideline” or equivalent documents may be mentioned in created document and its duplicated copies.

-

The document and its duplicated copies created according to previous two clauses may be provided to third party only if these are free of cost.

-

Use of this document and its duplicated copies, and transfer of rights of this contract to a third party, in whole or in part, beyond the conditions specified in this contract, are prohibited without the written consent of NTT Data.

-

NTT DATA shall not bear any responsibility regarding correctness of contents of this document, warranty of fitness for usage purpose, assurance of accuracy and reliability of usage result, liability for defect warranty, and any damage incurred directly or indirectly.

-

NTT DATA does not guarantee the infringement of copyrights and any other rights of third party through this document. In addition to this, NTT DATA shall not bear any responsibility regarding any claim (including the claims occurred due to dispute with third party) occurred directly or indirectly due to infringement of copyright and other rights.

This document is created by referring to Macchinetta "Reference document:Macchinetta Batch Framework Development Guideline".

Registered trademarks or trademarks of company name, service name, and product name of their respective companies used in this document are as follows.

-

TERASOLUNA is a registered trademark of NTT DATA Corporation.

-

Macchinetta is the registered trademark of NTT.

-

All other company names and product names are the registered trademarks or trademarks of their respective companies.

1.1.1. Reference

1.1.1.1. Macchinetta-Terms of use

In order to use this document, you are required to agree to abide by the following terms. If you do not agree with the terms, you must immediately delete or destroy this document and all its duplicate copies.

-

Copyrights and all other rights of this document shall belong to Nippon Telegraph and Telephone Corporation (hereinafter referred to as "NTT") or third party possessing such rights.

-

This document may be reproduced, translated or adapted, in whole or in part for personal use. However, deletion of the terms given on this page and copyright notice of NTT is prohibited.

-

This document may be changed, in whole or in part for personal use. Creation of secondary work using this document is allowed. However, “Reference document: Macchinetta Batch Framework Development Guideline” or equivalent documents may be mentioned in created document and its duplicate copies.

-

Document and its duplicate copies created according to previous two clauses may be provided to third party only if these are free softwares.

-

Use of this document and its duplicate copies, and transfer of rights of this contract to a third party, in whole or in part, beyond the conditions specified in this contract, are prohibited without the written consent of NTT.

-

NTT shall not bear any responsibility regarding correctness of contents of this document, warranty of fitness for usage purpose, assurance for accuracy and reliability of usage result, liability for defect warranty, and any damage incurred directly or indirectly.

-

NTT does not guarantee the infringement of copyrights and any other rights of third party through this document. In addition to this, NTT shall not bear any responsibility regarding any claim (Including the claims occurred due to dispute with third party) occurred directly or indirectly due to infringement of copyright and other rights.

Registered trademarks or trademarks of company name and service name, and product name of their respective companies used in this document are as follows.

-

Macchinetta is the registered trademark of NTT.

-

All other company names and product names are the registered trademarks or trademarks of their respective companies.

1.2. Introduction

1.2.1. Objective of guideline

This guideline provides best practices to develop Batch applications with high maintainability, using full stack framework focusing on Spring Framework, Spring Batch and MyBatis.

This guideline helps smooth progress of software development (mainly coding).

1.2.2. Target readers

This guideline is written for architects and programmers having software development experience and knowledge of the following.

-

Basic knowledge of DI and AOP of Spring Framework

-

Application development experience using Java

-

Knowledge of SQL

-

Have experience of using Maven

This guideline is not for Java beginners.

Refer to Spring Framework Comprehension Check to assess whether you have the basic knowledge to understand the document. If you are unable to answer 40% of the Comprehension test questions, it is recommended to study separately using following books.

1.2.3. Structure of guideline

For the start, importantly, the guideline is regarded as a subset of TERASOLUNA Server Framework for Java (5.x) Development Guideline (hereafter, referred to as TERASOLUNA Server 5.x Development Guideline). By using TERASOLUNA Server 5.x Development Guideline, you can eliminate duplication in explanation and reduce the cost of learning as much as possible. Since TERASOLUNA Server 5.x Development Guideline is referenced everywhere, we would like you to proceed with the development by using both guides.

- TERASOLUNA Batch Framework for Java (5.x)concept

-

Explains the basic concept of batch processing and the basic concept of TERASOLUNA Batch Framework for Java (5.x) and the overview of Spring Batch.

- Flow of application development

-

Explains the knowledge and method to be kept in mind while developing an application using TERASOLUNA Batch Framework for Java (5.x).

- Running a Job

-

Explains how to running a job as Synchronous, Asynchronous and provide job parameters.

- Input/output of data

-

Explains how to provide Input/Output to various resources such as Database, File access etc.

- Handling abnormal cases

-

Explains how to handle the abnormal conditions like Input checks, Exceptions.

- Job management

-

Explains how to manage the Job execution.

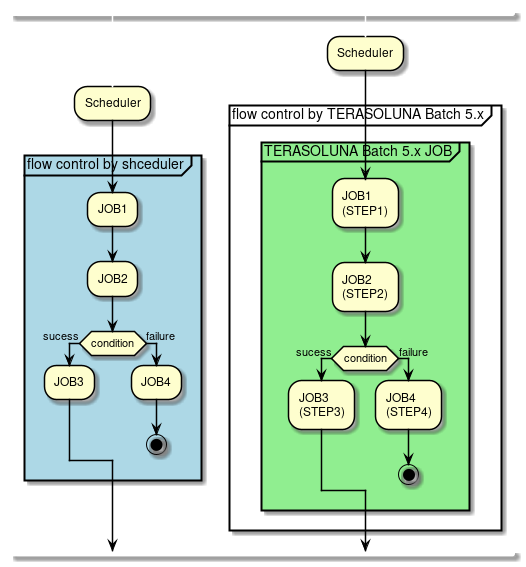

- Flow control and parallel/multiple processing

-

Explains the processing of parallel/multiple Job execution.

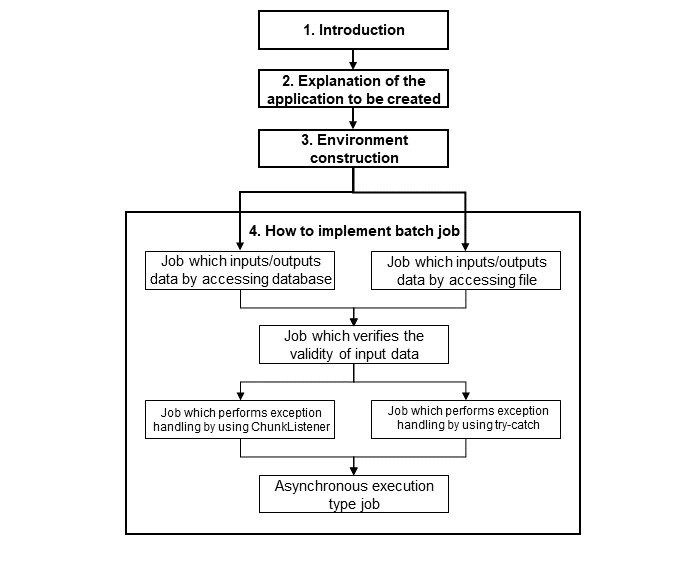

- Tutorial

-

Experience batch application development with TERASOLUNA Batch Framework for Java (5.x), through basic batch application development.

1.2.4. How to read guideline

It is strongly recommended for all the developers to read the following contents for using TERASOLUNA Batch Framework for Java (5.x).

The following contents are usually required to be read in advance. It is better to select according to the development target.

First refer to the following contents when proceeding with advanced implementation.

Developers who want to experience actual application development by using TERASOLUNA Batch Framework for Java (5.x) are recommended to read following contents. While experiencing TERASOLUNA Batch Framework for Java (5.x) for the first time, you should read these contents first and then move on to other contents.

1.2.4.1. Notations in guideline

This section describes the notations of this guideline.

- About Windows command prompt and Unix terminal

-

If Windows and Unix systems don’t work due to differences in notation, both are described. Otherwise, unified with Unix notation..

- Prompt sign

-

Describe as

$in Unix.

$ java -version- About defining properties and constructor of Bean definition

-

In this guideline, it is described by using namespace of

pandc. The use of namespace helps in simplifying and clarifying the description of Bean definition.

<bean class="org.springframework.batch.item.file.mapping.DefaultLineMapper">

<property name="lineTokenizer">

<bean class="org.terasoluna.batch.item.file.transform.FixedByteLengthLineTokenizer"

c:ranges="1-6, 7-10, 11-12, 13-22, 23-32"

c:charset="MS932"

p:names="branchId,year,month,customerId,amount"/>

</property>

</bean>For your reference, the description not using namespace is shown.

<bean class="org.springframework.batch.item.file.mapping.DefaultLineMapper">

<property name="lineTokenizer">

<bean class="org.terasoluna.batch.item.file.transform.FixedByteLengthLineTokenizer">

<constructor-arg index="0" value="1-6, 7-10, 11-12, 13-22, 23-32"/>

<constructor-arg index="1" value="MS932"/>

<property name="names" value="branchId,year,month,customerId,amount"/>

</property>

</bean>This guideline does not force the user to use a namespace. We would like to consider it for simplifying the explanation.

1.2.5. Tested environments of guideline

For tested environments of contents described in this guideline, refer to " Tested Environment".

1.3. Change Log

| Modified on | Modified locations | Modification details |

|---|---|---|

2018-3-16 |

- |

Released 5.1.1 RELEASE version |

General |

Description details modified Description details deleted |

|

Description details modified |

||

Description details deleted |

||

Description details modified Description details added |

||

Description details added |

||

Description details modified Description details added |

||

Description details modified |

||

Description details modified |

||

Description details added |

||

Description details modified |

||

Description details modified |

||

Description details modified |

||

2017-09-27 |

- |

Released 5.0.1 RELEASE version |

General |

Description details modified Description details added |

|

Description details added Description details deleted |

||

Description details modified |

||

Description details modified |

||

Description details modified Description details added |

||

Description details modified Description details added |

||

Description details modified |

||

Description details modified Description details added |

||

Description details modified Description details added |

||

Description details added |

||

Description details modified Description details added |

||

Description details modified |

||

Description details deleted |

||

Description details added |

||

Description details modified |

||

New chapter added |

||

2017-03-17 |

- |

Released |

2. TERASOLUNA Batch Framework for Java (5.x) concept

2.1. Batch Processing in General

2.1.1. Introduction to Batch Processing

The term of "Batch Processing" refers to the execution or the process of a series of jobs in a computer program without manual intervention (non-interactive).

It is often a process of reading, processing and writing a large number of records from a database or a file.

Batch processing is a processing method which prioritizes process throughput over responsiveness, as compared to online processing and consists of the following features.

-

Process data in a fixed amount.

-

Uninterruptible process is done in the certain time and fixed sequence.

-

Process runs in accordance with the schedule.

Objective of batch processing is given below.

- Enhanced throughput

-

Process throughput can be enhanced by processing the data sets collectively in a batch.

File or database does not input or output data one by one, and instead sums up data of a fixed quantity thus dramatically reducing overheads of waiting for I/O resulting in the increased efficiency. Even though the waiting period for I/O of a single record is insignificant, cumulative accumulation results in fatal delay while processing a large amount of data. - Ensuring responsiveness

-

Processes which are not required to be processed immediately are cut for batch processing in order to ensure responsiveness of online processing.

For example, when the process results are not required immediately, the processing is done until acceptance by online processing and, batch processing is performed in the background. The processing method is generally called "delayed processing". - Response to time and events

-

Processes corresponding to specific period and events are naturally implemented by batch processing.

For example, aggregating a month’s data on 1st weekend of next month according to business requirement,

taking a week’s backup of business data on Sunday at 2 a.m. in accordance with the system operation rules,

and so on. - Restriction for coordination with external system

-

Batch processing is also used due to restrictions of interface like files with interactions of external systems.

File sent from the external system is a summary of data collected for a certain period. Batch processing is better suited for the processes which incorporate these files, than the online processing.

It is very common to combine various techniques to achieve batch processing. Major techniques are introduced here.

- Job Scheduler

-

A single execution unit of a batch processing is called a job. A job scheduler is a middleware to manage this job.

A batch system rarely has several jobs, and usually the number of jobs can reach hundreds or even thousands at times. Hence, an exclusive system to define the relation with the job and manage execution schedule becomes indispensable. - Shell script

-

It is one of the methods to implement a job. A process is achieved by combining the commands implemented in OS and middleware.

Although the method can be implemented easily, it is not suitable for writing complex business logic. Hence, it is primarily used in simple processes like copying a file, backup, clearing a table etc. Further, shell script performs only the pre-start settings and post-execution processing while executing a process implemented in another programming language. - Programming language

-

It is one of the methods to implement a job. Structured code can be written rather than the shell script and is advantageous for securing development productivity, maintainability and quality. Hence, it is commonly used to implement business logic that processes data of file or database which tend to be relatively complex with logic.

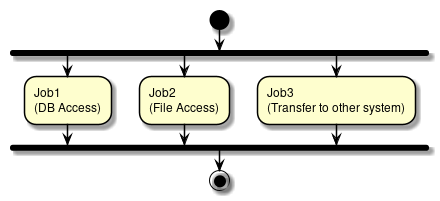

2.1.2. Requirements for batch processing

Requirements for batch processing in order to implement business process are given as below.

-

Performance improvement

-

A certain quantity of data can be processed in a batch.

-

Jobs can be executed in parallel/in multiple.

-

-

Recovery in case of an abnormality

-

Jobs can be reexecuted (manual/schedule).

-

At the time of reprocessing, it is possible to process only unprocessed records by skipping processed records.

-

-

Various activation methods for running jobs

-

Synchronous execution possible.

-

Asynchronous execution possible.

-

DB polling, HTTP requests can be used as opportunities for execution.

-

-

-

Various input and output interfaces

-

Database

-

File

-

Variable length like CSV or TSV

-

Fixed length

-

XML

-

-

Specific details for the above requirements are given below.

- A large amount of data can be efficiently processed using certain resources (Performance improvement)

-

Processing time is reduced by processing the data collectively. The important part here is "Certain resources" part.

Processing can be done by using a CPU and memory for 100 or even 1 million records and the processing time is ideally extended slowly and linearly according to the number of records. Transaction is started and terminated for certain number of records to perform a process collectively. The used resources must be levelled in order to perform I/O collectively.

If you still want to deal with enormous amounts of data that can not be handled, you will need to add a mechanism to move the hardware resources one step further to the limit. Data to be processed is divided into records or groups. Then, multiple processing is done by using multiple processes and multiple threads. Moving ahead, distributed processing using multiple machines is also implemented. When resources are used upto the limit, it becomes extremely important to reduce as much as possible. - Continue the processing as much as possible (Recovery at the time of occurrence of abnormality)

-

In processing large amounts of data, the countermeasures must be considered when an abnormality occurs in input data or system itself.

Large amounts of data inevitably take a long time to finish processing, but if the time to recover after the occurrence of error is prolonged, the system operation will be greatly affected.

For example, consider a data consisting of 1 billion records to be processed. Operation schedule would be obviously affected a great deal if error is detected in 999 millionth record and the processing so far is to be performed all over again.

To control this impact, process continuity unique to batch processing becomes very important. Hence a mechanism to process the next data while skipping error data, a mechanism to restart the process and a mechanism which attempts auto-recovery as much as possible and so on, becomes necessary. Further, it is important to simplify a job as much as possible and make re-execution easier. - Can be executed flexibly according to triggers of execution (various activation methods)

-

In case of time triggered, a mechanism to deal with various execution opportunities such as when triggered by online and external system cooperation is necessary. Various systems are widely known such as synchronous processing wherein processing starts when the job scheduler reaches scheduled time, asynchronous processing wherein the process is kept resident and batch processing is performed as per the events.

- Handles various input and output interfaces (Various input output interfaces)

-

It is important to handle various files like CSV/XML as well as databases for linking online and external systems. Further, if a method which transparently handles respective input and output method exists, implementation becomes easier and to deal with various formats becomes more quickly.

2.1.3. Rules and precautions to be considered in batch processing

Important rules while building a batch processing system and a few considerations are shown.

-

Simplify unit batch processing as much as possible and avoid complex logical structures.

-

Keep process and data in physical proximity (Save data at the location where process is executed).

-

Minimise the use of system resources (especially I/O) and execute operations in in-memory as much as possible.

-

Further, review I/O of application (SQL etc) to avoid unnecessary physical I/O.

-

Do not repeat the same process for multiple jobs.

-

For example, in case of counting and reporting process, avoid repetition of counting process during reporting process.

-

-

Always assume the worst situation related to data consistency. Verify data to check and to maintain consistency.

-

Review backups carefully. The difficulty level of backup will be high especially when the system is operational seven days a week.

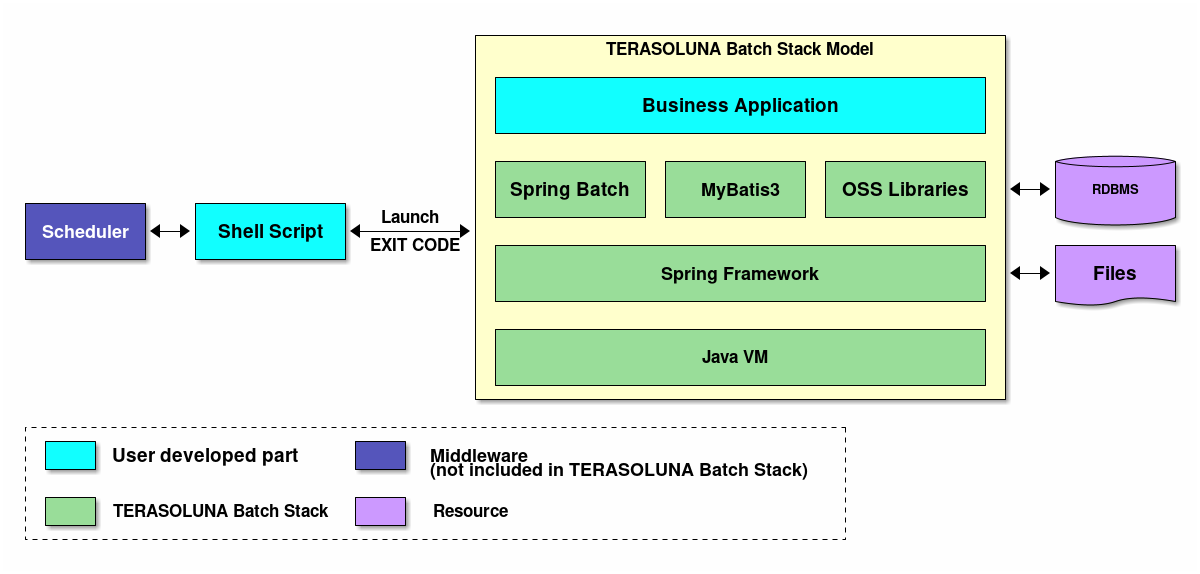

2.2. TERASOLUNA Batch Framework for Java (5.x) stack

2.2.1. Overview

Explains the TERASOLUNA Batch Framework for Java (5.x)configuration and shows the scope of responsibility of TERASOLUNA Batch Framework for Java (5.x).

2.2.2. TERASOLUNA Batch Framework for Java (5.x) stack

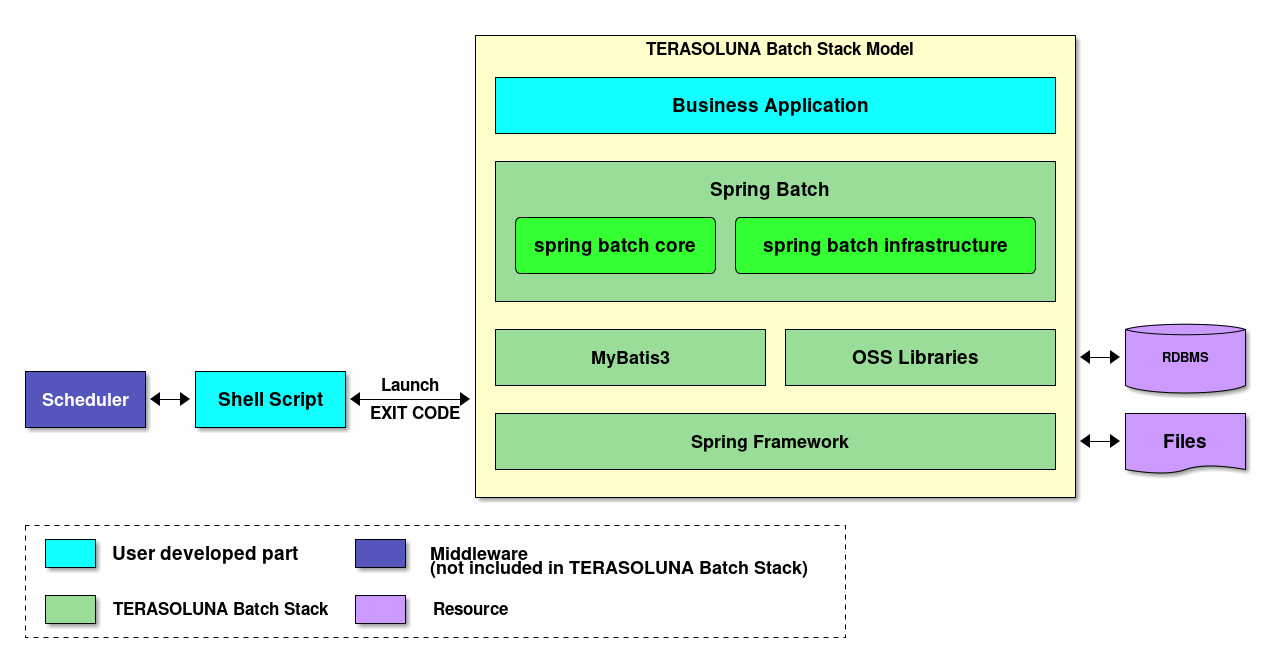

Software Framework used in the TERASOLUNA Batch Framework for Java (5.x) is a combination of OSS focusing on Spring Framework (Spring Batch) A stack schematic diagram of the TERASOLUNA Batch Framework for Java (5.x) is shown below.

Descriptions for products like job scheduler and database are excluded from this guideline.

2.2.2.1. OSS version to be used

List of OSS versions to be used in 5.1.1.RELEASE of TERASOLUNA Batch Framework for Java (5.x) is given below.

|

As a rule, OSS version to be used in the TERASOLUNA Batch Framework for Java (5.x) conforms to the definition of the Spring IO platform.

Note that, the version of Spring IO platform in 5.1.1.RELEASE is

Brussels-SR5. |

| Type | GroupId | ArtifactId | Version | Spring IO platform | Remarks |

|---|---|---|---|---|---|

Spring |

org.springframework |

spring-aop |

4.3.14.RELEASE |

*2 |

|

Spring |

org.springframework |

spring-beans |

4.3.14.RELEASE |

*2 |

|

Spring |

org.springframework |

spring-context |

4.3.14.RELEASE |

*2 |

|

Spring |

org.springframework |

spring-expression |

4.3.14.RELEASE |

*2 |

|

Spring |

org.springframework |

spring-core |

4.3.14.RELEASE |

*2 |

|

Spring |

org.springframework |

spring-tx |

4.3.14.RELEASE |

*2 |

|

Spring |

org.springframework |

spring-jdbc |

4.3.14.RELEASE |

*2 |

|

Spring Batch |

org.springframework.batch |

spring-batch-core |

3.0.8.RELEASE |

* |

|

Spring Batch |

org.springframework.batch |

spring-batch-infrastructure |

3.0.8.RELEASE |

* |

|

Spring Retry |

org.springframework.retry |

spring-retry |

1.2.1.RELEASE |

* |

|

Java Batch |

javax.batch |

javax.batch-api |

1.0.1 |

* |

|

Java Batch |

com.ibm.jbatch |

com.ibm.jbatch-tck-spi |

1.0 |

* |

|

MyBatis3 |

org.mybatis |

mybatis |

3.4.5 |

||

MyBatis3 |

org.mybatis |

mybatis-spring |

1.3.1 |

||

MyBatis3 |

org.mybatis |

mybatis-typehandlers-jsr310 |

1.0.2 |

||

DI |

javax.inject |

javax.inject |

1 |

* |

|

Log output |

ch.qos.logback |

logback-classic |

1.1.11 |

* |

|

Log output |

ch.qos.logback |

logback-core |

1.1.11 |

* |

*1 |

Log output |

org.slf4j |

jcl-over-slf4j |

1.7.25 |

* |

|

Log output |

org.slf4j |

slf4j-api |

1.7.25 |

* |

|

Input check |

javax.validation |

validation-api |

1.1.0.Final |

* |

|

Input check |

org.hibernate |

hibernate-validator |

5.3.5.Final |

* |

|

Input check |

org.jboss.logging |

jboss-logging |

3.3.1.Final |

* |

*1 |

Input check |

com.fasterxml |

classmate |

1.3.4 |

* |

*1 |

Connection pool |

org.apache.commons |

commons-dbcp2 |

2.1.1 |

* |

|

Connection pool |

org.apache.commons |

commons-pool2 |

2.4.2 |

* |

|

Expression Language |

org.glassfish |

javax.el |

3.0.0 |

* |

|

In-memory database |

com.h2database |

h2 |

1.4.193 |

* |

|

XML |

com.thoughtworks.xstream |

xstream |

1.4.10 |

* |

*1 |

JSON |

org.codehaus.jettison |

jettison |

1.2 |

* |

*1 |

-

Libraries on which the libraries supported by the Spring IO platform depend independently

-

Set a version different from Spring IO platform version to handle vulnerability

|

Regarding standard error output of xstream

When xstream version is 1.4.10, Bean definition is read by Spring Batch and the following message is output in standard error output while executing job. Currently, XStream security settings cannot be done in Spring Batch so message output cannot be controlled. When using XStream other than importing the developed job Bean definition (such as data linkage), do not load with XStream except for trusted source XML. |

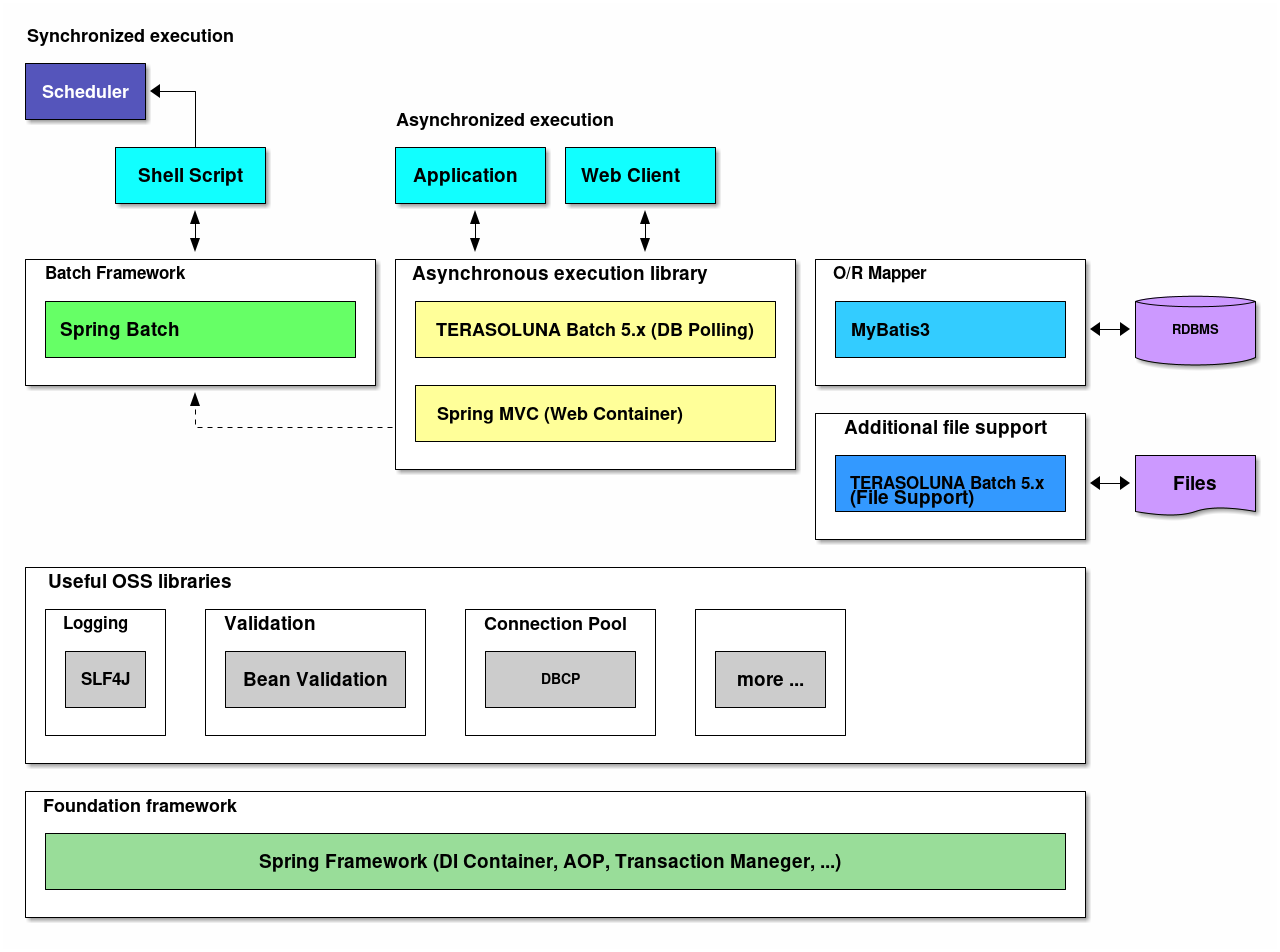

2.2.3. Structural elements of TERASOLUNA Batch Framework for Java (5.x)

Software Framework structural elements of the TERASOLUNA Batch Framework for Java (5.x) are explained.

Overview of each element is shown below.

- Foundation framework

-

Spring Framework is used as a framework foundation. Various functions are applied starting with DI container.

- Batch framework

-

Spring Batch is used as a batch framework.

- Asynchronous execution

-

Following functions are used as a method to execute asynchronous execution.

- Periodic activation by using DB polling

-

A library offered by TERASOLUNA Batch Framework for Java (5.x) is used.

- Web container activation

-

Link with Spring Batch using Spring MVC.

- O/R Mapper

-

Use MyBatis, and use MyBatis-Spring as a library to coordinate with Spring Framework.

- File access

-

In addition to Function offered from Spring Batch, TERASOLUNA Batch Framework for Java (5.x) is used as an auxiiliary function.

- Logging

-

Logger uses SLF4J in API and Logback in the implementation.

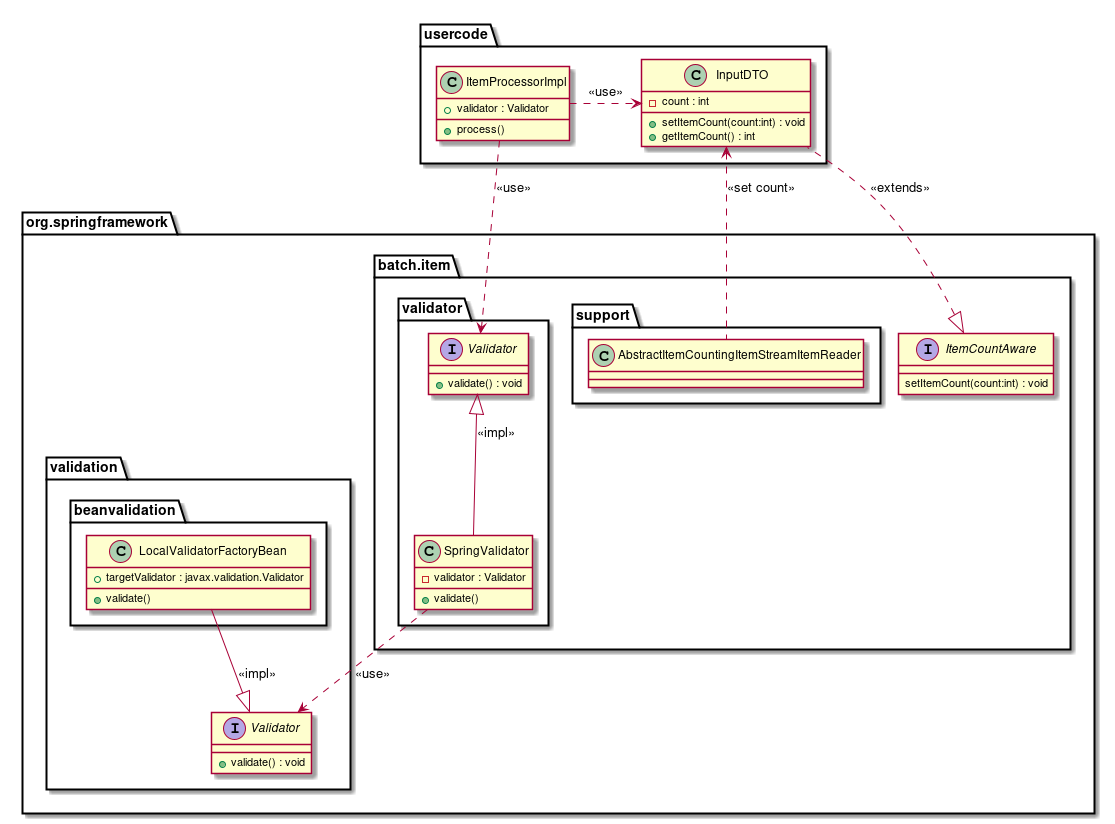

- Validation

-

- Unit item check

-

Bean Validation is used in unit item check and Hibernate Validator is used for implementation.

- Correlation check

-

Bean Validation or Spring Validation is used for correlation check.

- Connection pool

-

DBCP is used in the connection pool.

A function wherein TERASOLUNA Batch Framework for Java (5.x) provides implementation

A function, wherein TERASOLUNA Batch Framework for Java (5.x) provides implementation is given below.

Function name |

Overview |

Asynchronous execution using DB polling is implemented. |

|

Read fixed-length file without line breaks by number of bytes. |

|

Break down a fixed length record in individual field by number of bytes. |

|

Control output of enclosed characters by variable length records. |

2.3. Spring Batch Architecture

2.3.1. Overview

Spring Batch architecture acting as a base for TERASOLUNA Server Framework for Java (5.x) is explained.

2.3.1.1. What is Spring Batch

Spring Batch, as the name implies is a batch application framework. Following functions are offered based on DI container of Spring, AOP and transaction control function.

- Functions to standardize process flow

-

- Tasket model

-

- Simple process

-

It is a method to freely describe a process. It is used in a simple cases like issuing SQL once, issuing a command etc and the complex cases like performing processing while accessing multiple database or files, which are difficult to standardize.

- Chunk model

-

- Efficient processing of large amount of data

-

A method to collectively input/process/output a fixed amount of data. Job can be implemented simply by standardizing the flow of processing such as input / processing / output of data and implementing a part.

- Various activation methods

-

Execution is achieved by various triggers like command line execution, execution on Servlet and other triggers.

- I/O of various data formats

-

Input and output for various data resources like file, database, message queue etc can be performed easily.

- Efficient processing

-

Multiple execution, parallel execution, conditional branching are done based on the settings.

- Job execution control

-

Permanence of execution status, restart based on the number of data items, and so on can be possible.

2.3.1.2. Hello, Spring Batch!

If Spring Batch is not covered in understanding of Spring Batch architecture so far, the official documentation given below should be read. We would like you to get used to Spring Batch through creating simple application.

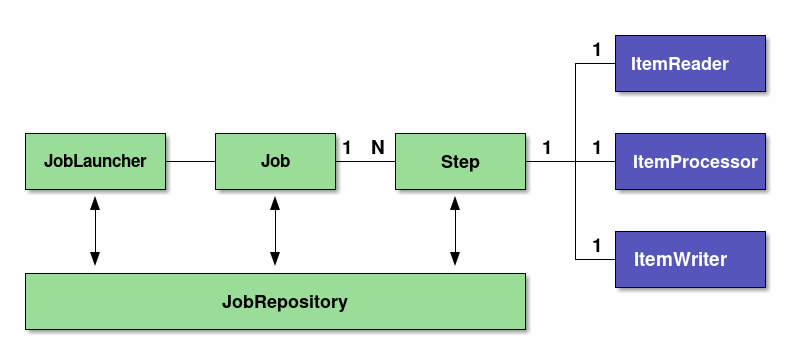

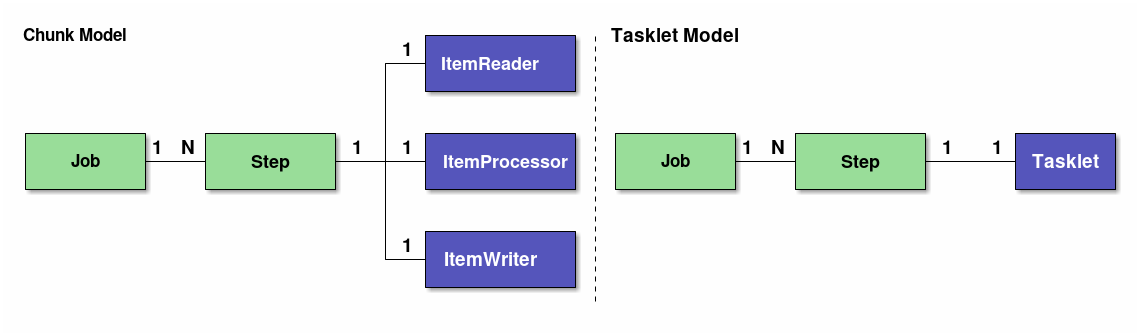

2.3.1.3. Basic structure of Spring Batch

Basic structure of Spring Batch is explained.

Spring Batch defines structure of batch process. It is recommended to perform development after understanding the structure.

| Components | Roles |

|---|---|

Job |

A single execution unit that summarises a series of processes for batch application in Spring Batch. |

Step |

A unit of processing which constitutes Job. 1 job can contain 1~N steps |

JobLauncher |

An interface for running a Job. |

ItemReader |

When implementing the chunk model, it is an interface for dividing it into three pieces of data input / processing / output. In Tasket model, ItemReader/ItemProcessor/ItemWriter substitutes a single Tasklet interface implementation. Input-Output, Input check and business logic all must be implemented in Tasklet. |

JobRepository |

A mechanism for managing the status of Job and Step. The management information is persisted on the database based on the table schema specified by Spring Batch. |

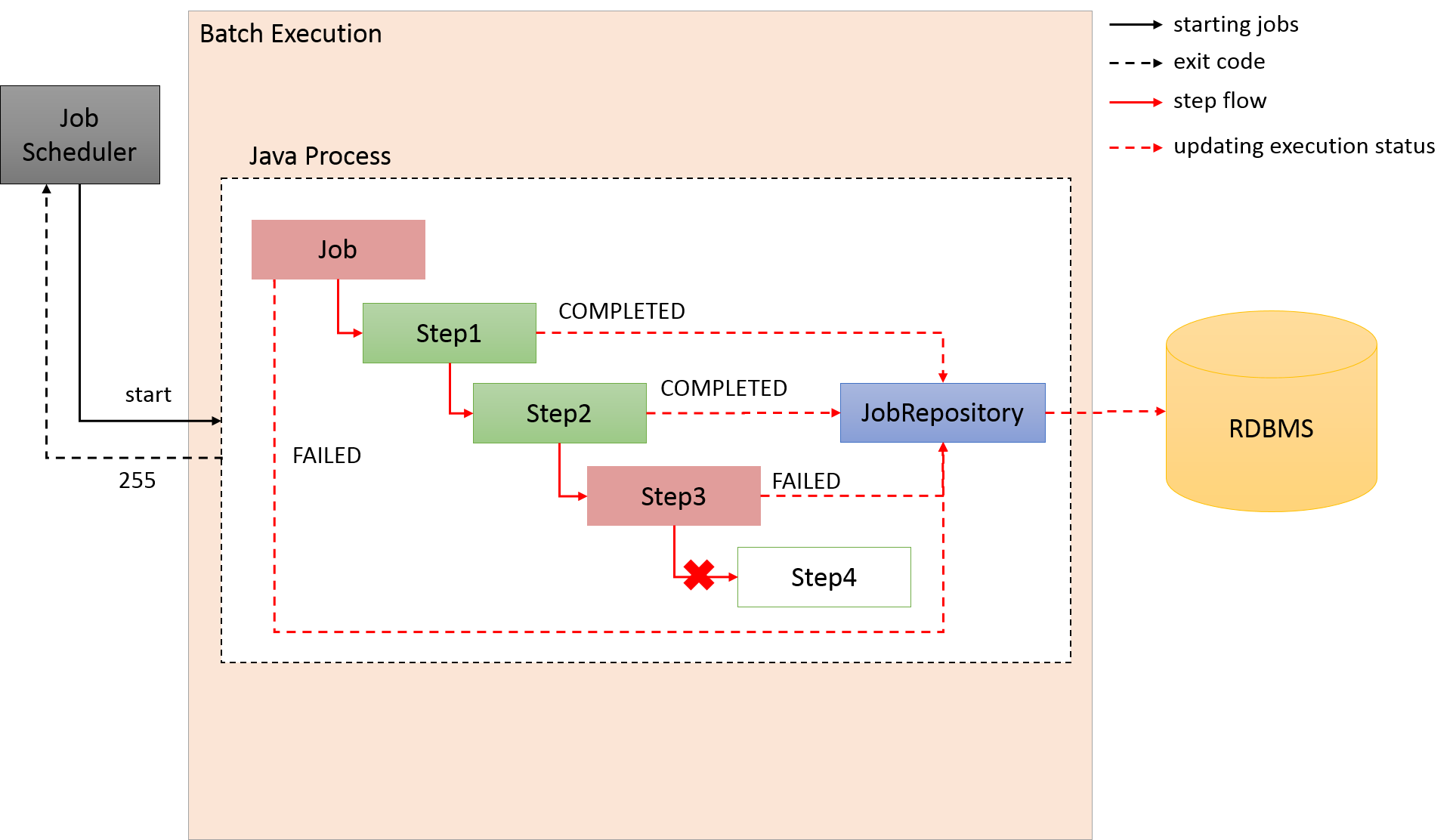

2.3.2. Architecture

Basic structure of Spring Batch is briefly explained in Overview.

Following points are explained on this basis.

In the end, performance tuning points of batch application which use Spring Batch are explained.

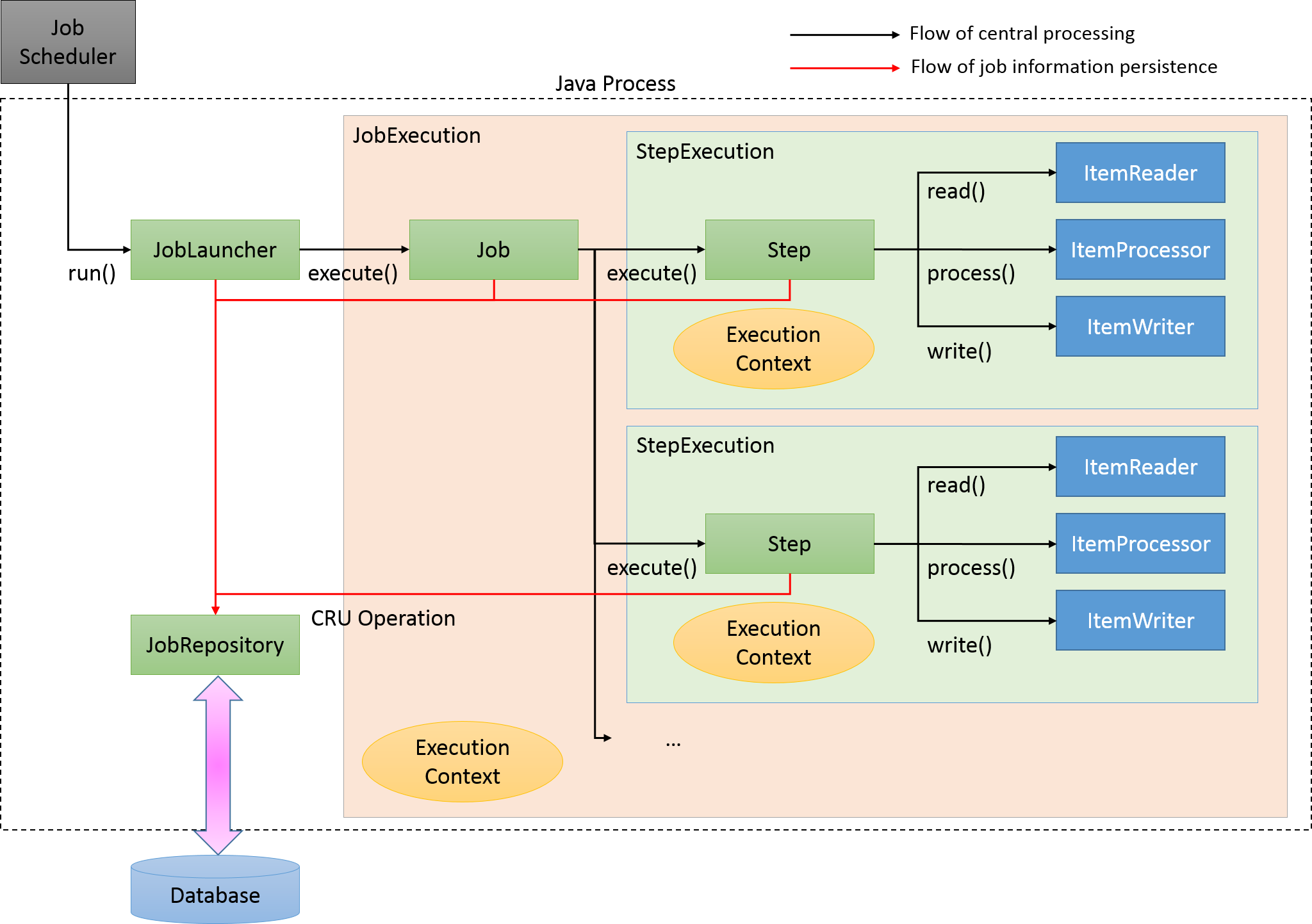

2.3.2.1. Overall process flow

Primary components of Spring Batch and overall process flow is explained. Further, explanation is also given about how to manage meta data of execution status of jobs.

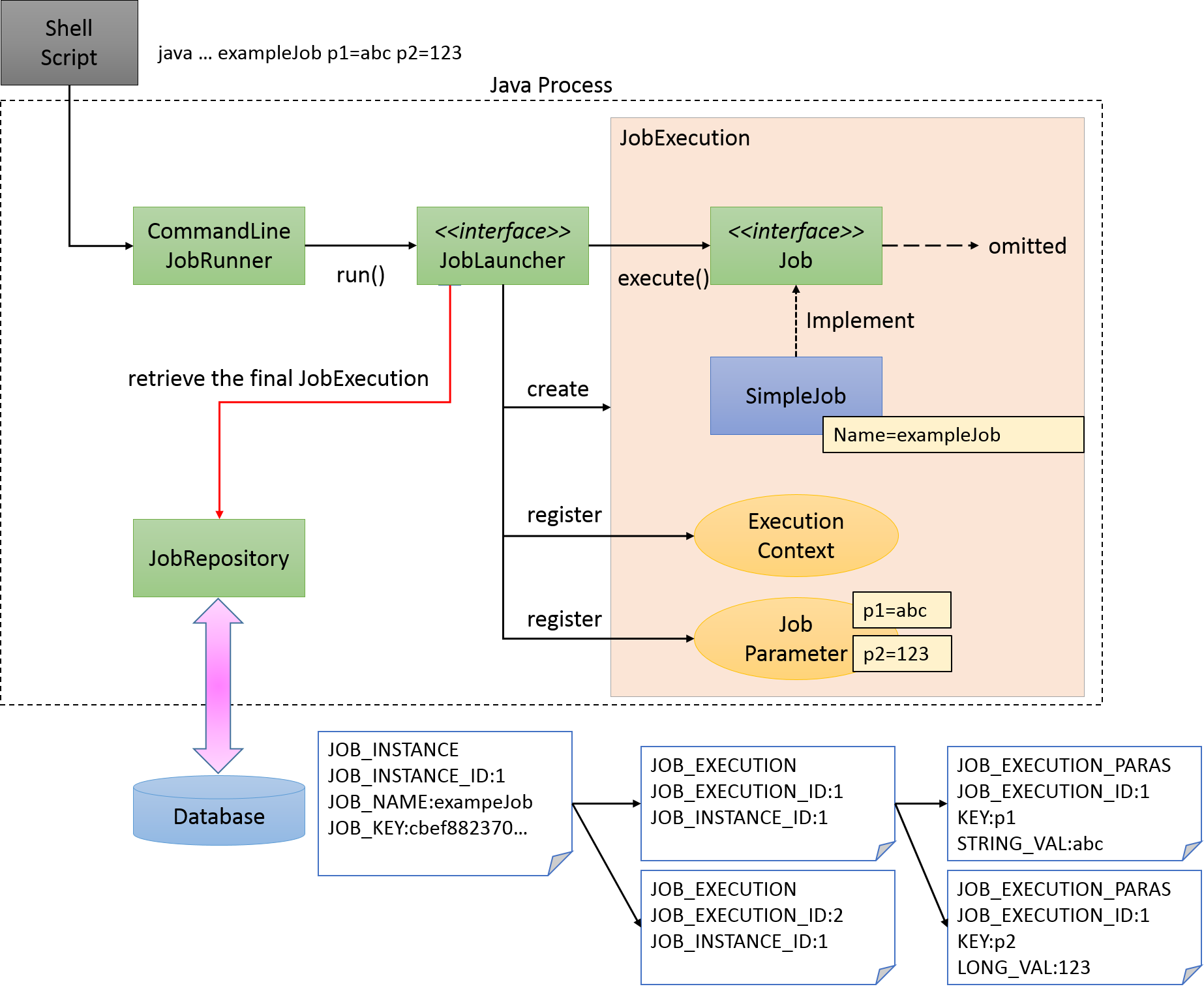

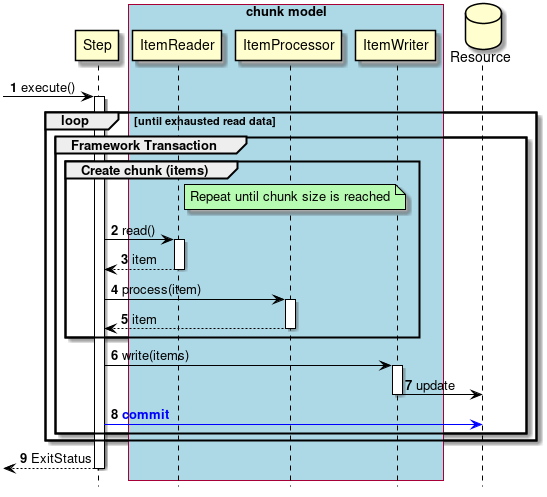

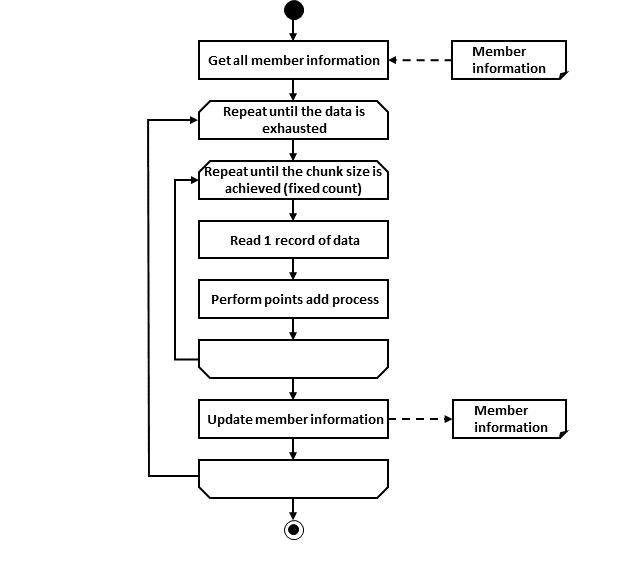

Primary components of Spring Batch and overall process flow (chunk model) are shown in the figure below.

Main processing flow (black line) and the flow which persists job information (red line) are explained.

-

JobLauncher is initiated from the job scheduler.

-

Job is executed from JobLauncher.

-

Step is executed from Job.

-

Step fetches input data by using ItemReader.

-

Step processes input data by using ItemProcessor.

-

Step outputs processed data by using ItemWriter.

-

JobLauncher registers JobInstance in Database through JobRepository.

-

JobLauncher registers that Job execution has started in Database through JobRepository.

-

JobStep updates miscellaneous information like counts of I/O records and status in Database through JobRepository.

-

JobLauncher registers that Job execution has completed in Database through JobRepository.

Description of JobRepository focusing on components and persistence is shown as follows.

| Components | Roles |

|---|---|

JobInstance |

Spring Batch indicates "logical" execution of a Job. JobInstance is identified by Job name and arguments.

In other words, execution with identical Job name and argument is identified as execution of identical JobInstance and Job is executed as a continuation from previous activation. |

JobExecution |

JobExecution indicates "physical" execution of Job. Unlike JobInstance, it is termed as another JobExecution even while re-executing identical Job. As a result, JobInstance and JobExecution shows one-to-many relationship. |

StepExecution |

StepExecution indicates "physical" execution of Step. JobExecution and StepExecution shows one-to-many relationship. |

JobRepository |

A function to manage and persist data for managing execution results and status of batch application like JobExecution or StepExecution is provided. |

The reason why Spring Batch is heavily managing metadata is to realize re-execution. In order to make batch processing re-executable, it is necessary to keep the snapshot at the last execution, the metadata and JobRepository are the basis for that.

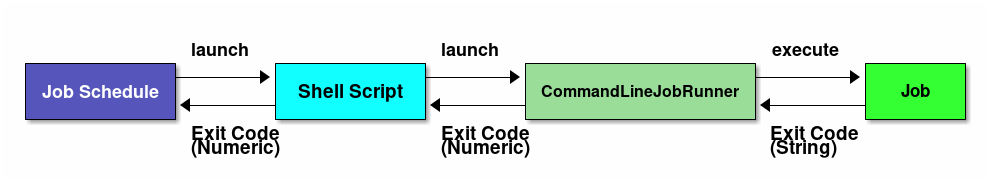

2.3.2.2. Running a Job

How to run a Job is explained.

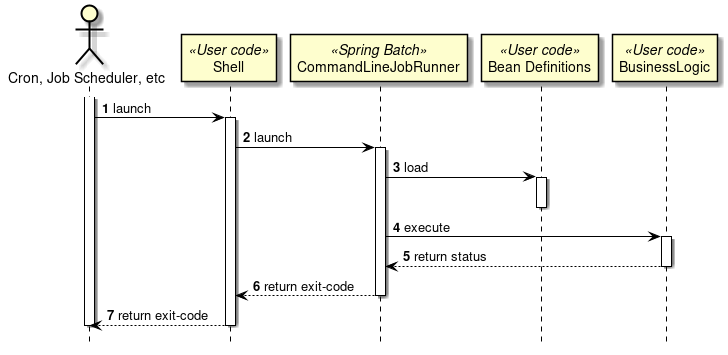

A scenario is considered wherein a batch process is started immediately after starting Java process and Java process is terminated after batch processing is completed. Figure below shows a process flow from starting a Java process till starting a batch process.

At the same time as the Java process is started, it is common to describe shell script that starts Java to start the Job defined on Spring Batch. When CommandLineJobRunner offered by Spring Batch is used, Job on Spring Batch defined by the user can be easily started.

The start command of the job which uses CommandLineJobRunner is shown as below.

java -cp ${CLASSPATH} org.springframework.batch.core.launch.support.CommandLineJobRunner <jobPath> <jobName> <JobArgumentName1>=<value1> <JobArgumentName2>=<value2> ...CommandLineJobRunner can pass arguments (job parameters) as well along with Job name to be started.

Arguments are specified in <Job argument name>=<Value> format as per the example described earlier.

All arguments are interpreted and checked by CommandLineJobRunner or JobLauncher, stored in JobExecution after conversion to JobParameters.

For details, refer to startup parameters of Job.

JobLauncher fetches Job name from JobRepository and JobInstance matching with the argument from the database.

-

When corresponding JobInstance does not exist, JobInstance is registered as new.

-

When corresponding JobInstance exists, the associated JobExecution is restored.

-

Spring Batch has adopted a method of adding arguments for JobInstance only to make it unique, for the jobs that may run repeatedly, such as daily execution. For example, adding system date or random number to arguments are listed.

For the method recommended in this guideline, refer parameter conversion class.

-

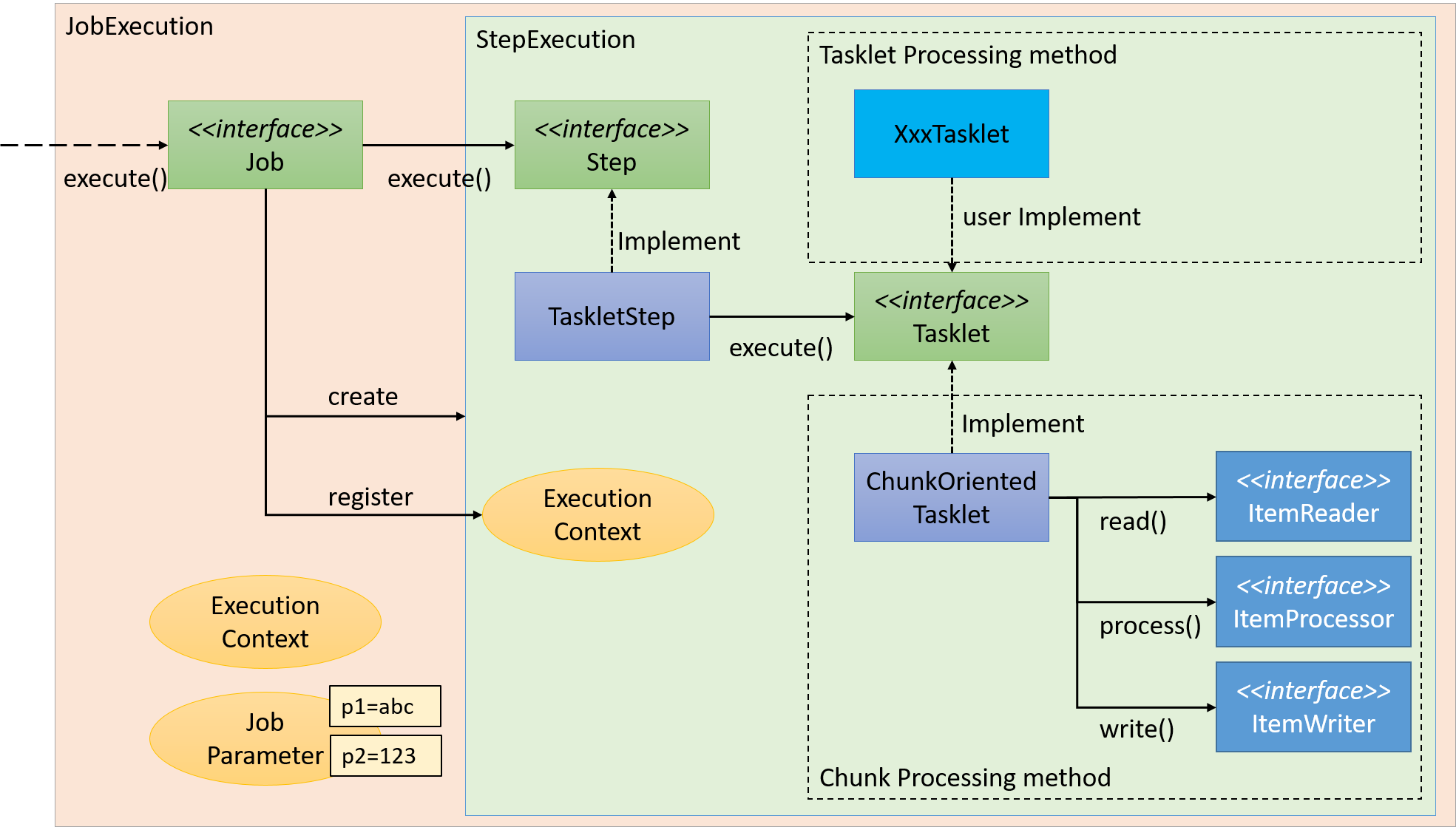

2.3.2.3. Execution of business logic

Job is divided into smaller units called steps in Spring Batch. When Job is started, Job activates already registered steps and generates StepExecution. Step is a framework for dividing the process till the end and execution of business logic is delegated to Tasket called from Step.

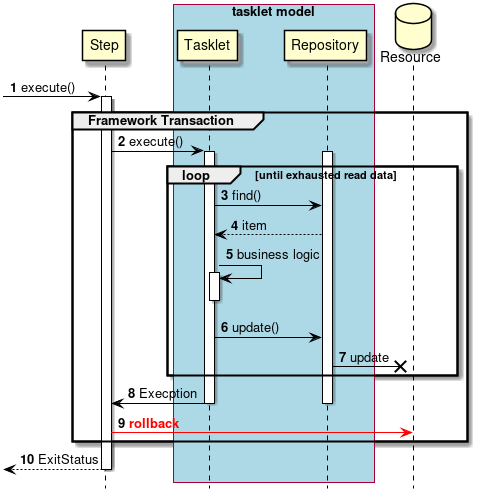

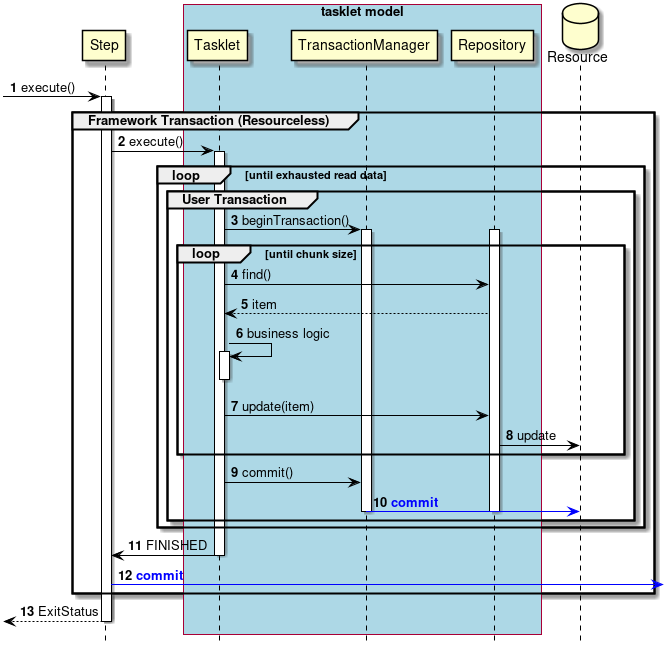

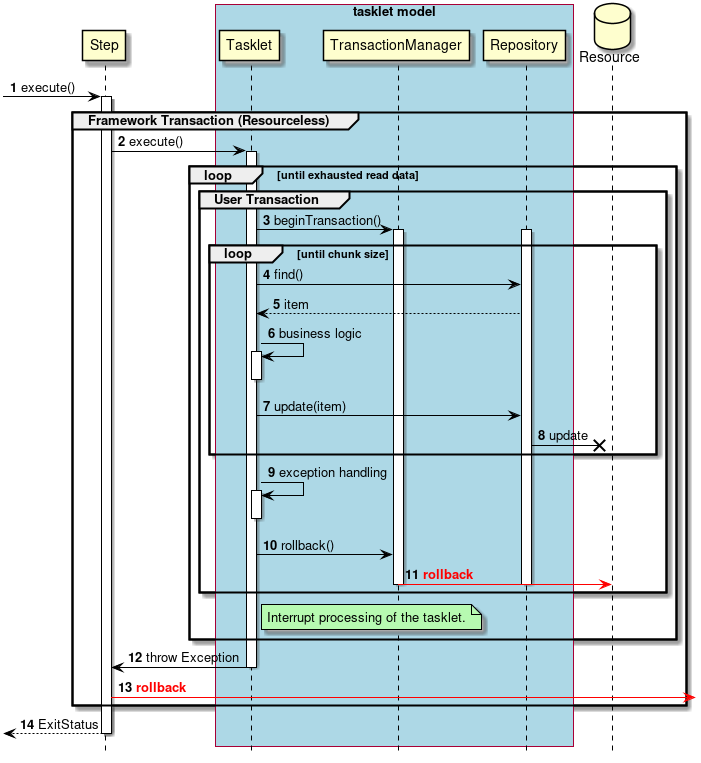

Flow from Step to Tasklet is shown below.

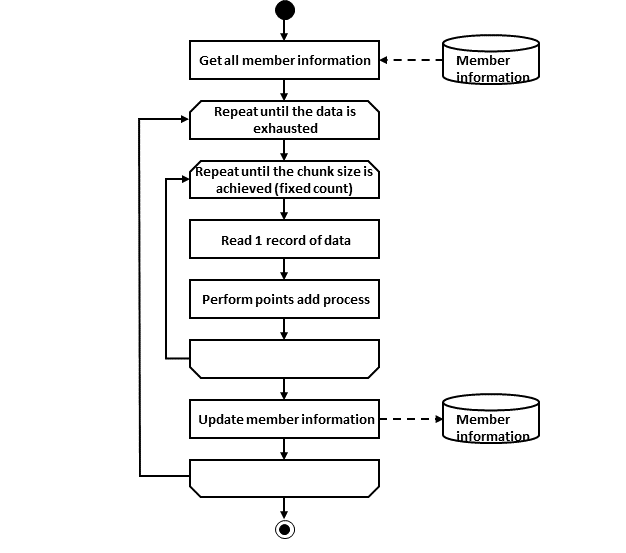

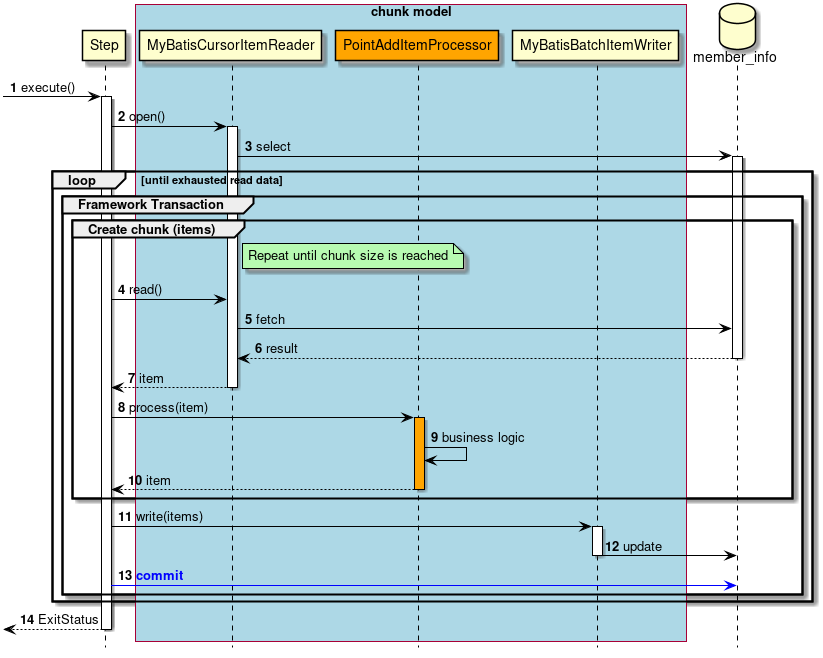

A couple of methods can be listed as the implementation methods of Tasklet - "Chunk model" and "Tasket model". Since the overview has already been explained, the structure will be now explained here.

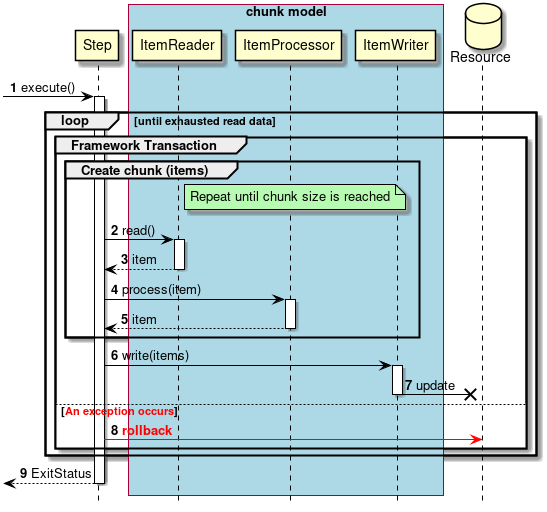

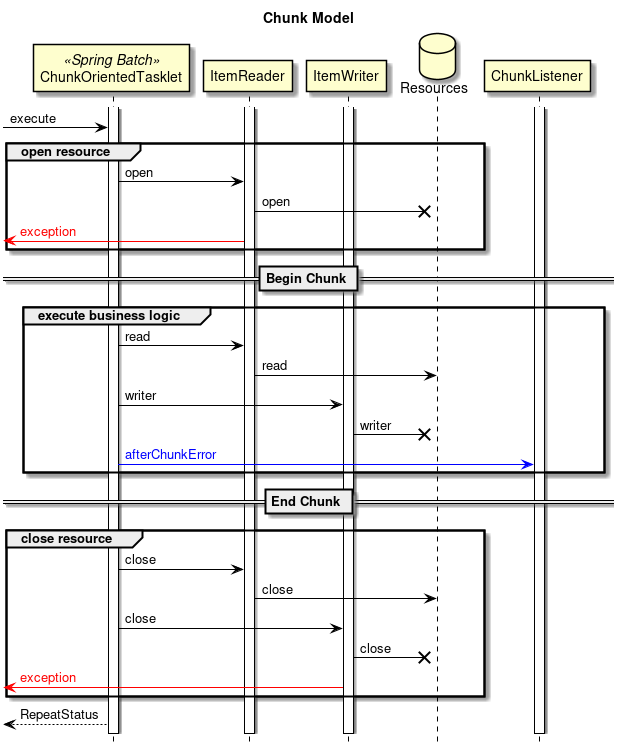

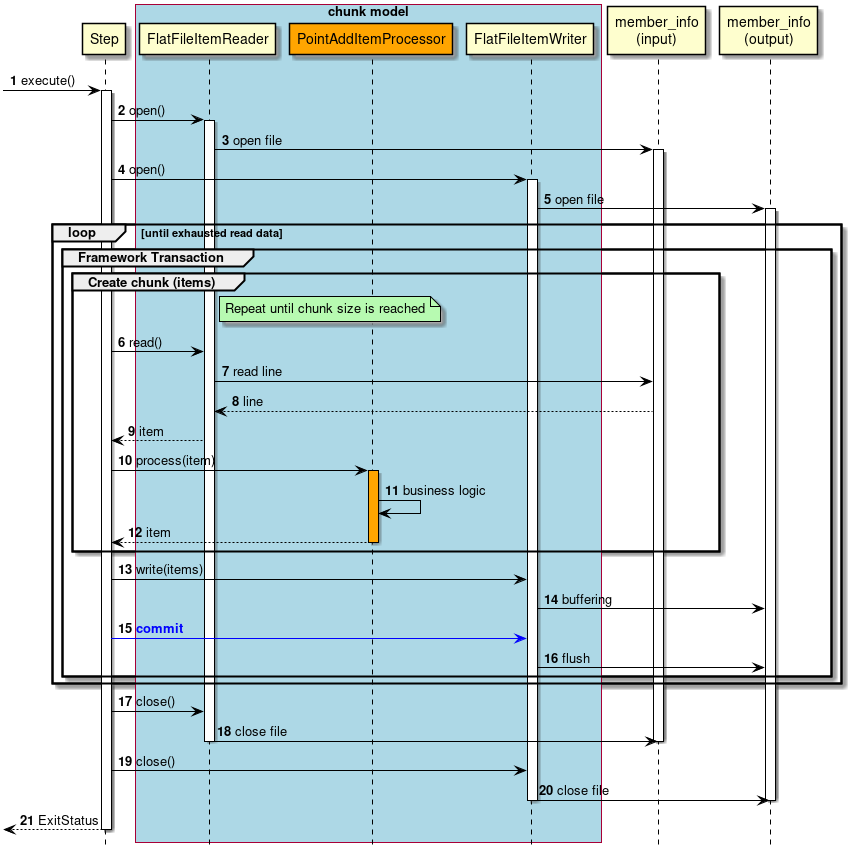

2.3.2.3.1. Chunk model

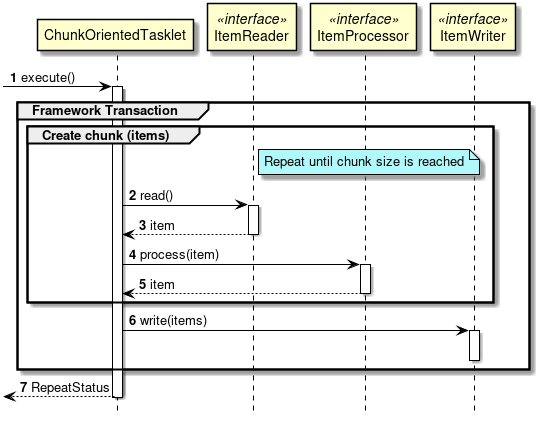

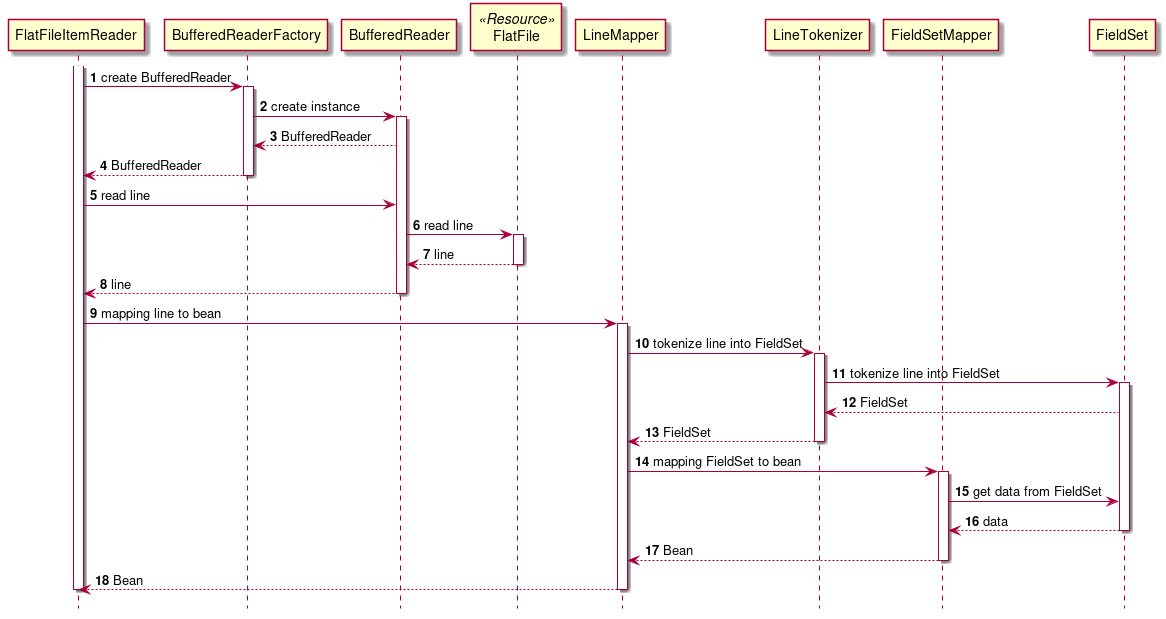

As described above, chunk model is a method wherein the processing is performed in a certain number of units (chunks) rather than processing the data to be processed one by one unit. ChunkOrientedTasklet acts as a concrete class of Tasklet which supports the chunk processing. Maximum records of data to be included in the chunk (hereafter referred as "chunk size") can be adjusted by using setup value called commit-interval of this class. ItemReader, ItemProcessor and ItemWriter are all the interfaces based on chunk processing.

Next, explanation is given about how ChunkOrientedTasklet calls the ItemReader, ItemProcessor and ItemWriter.

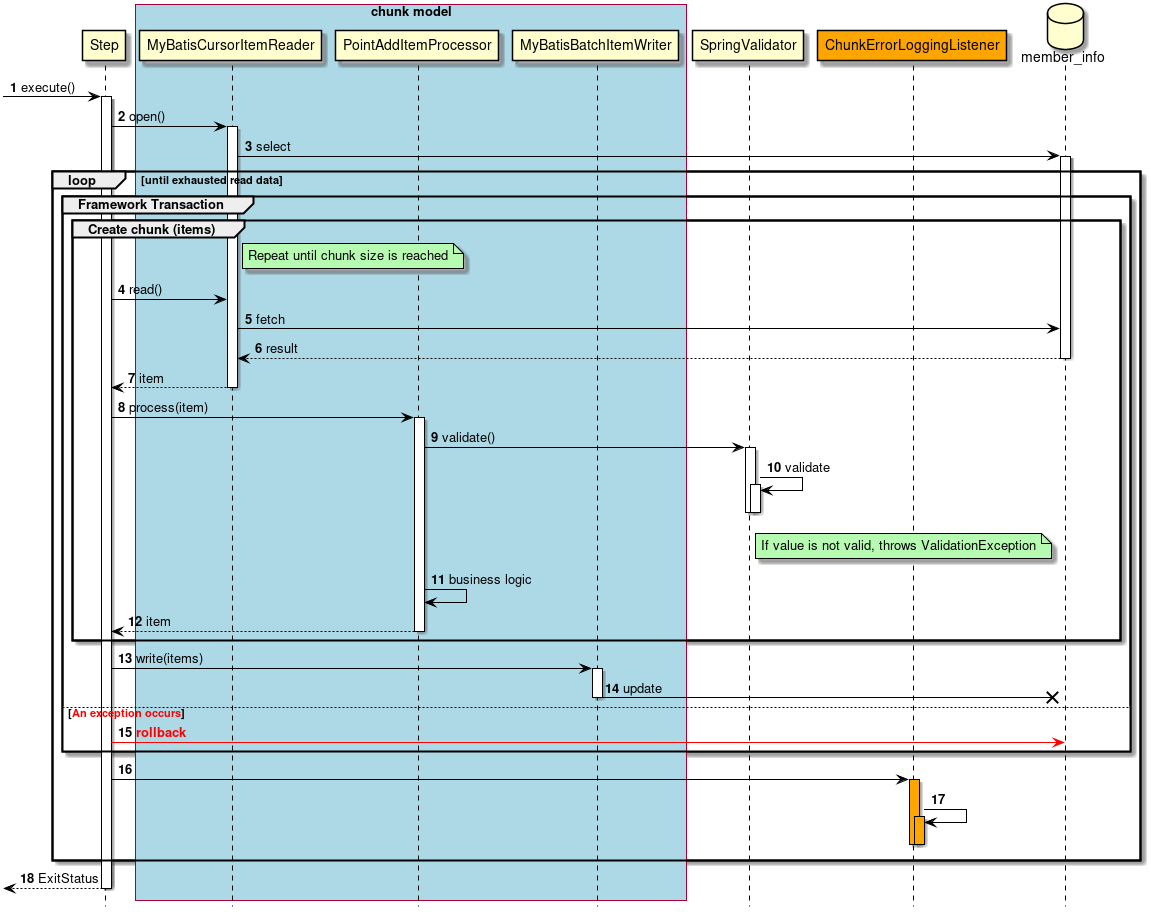

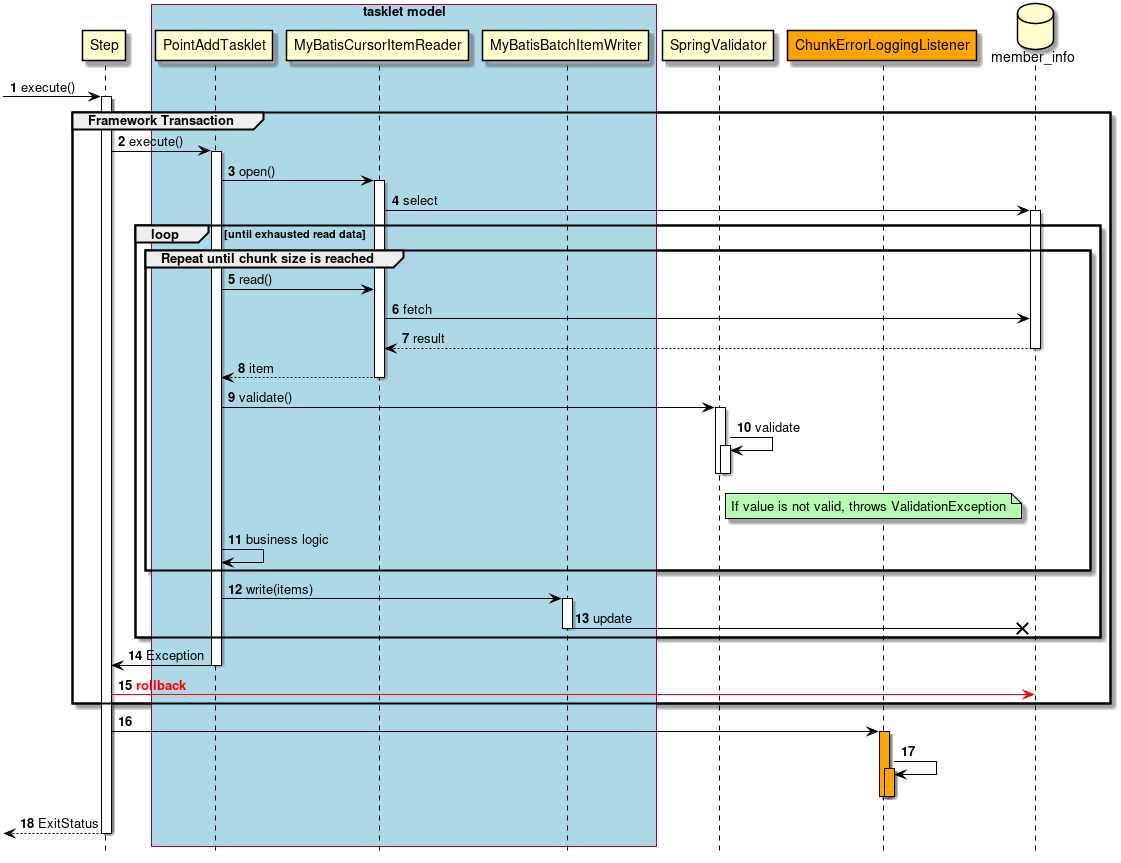

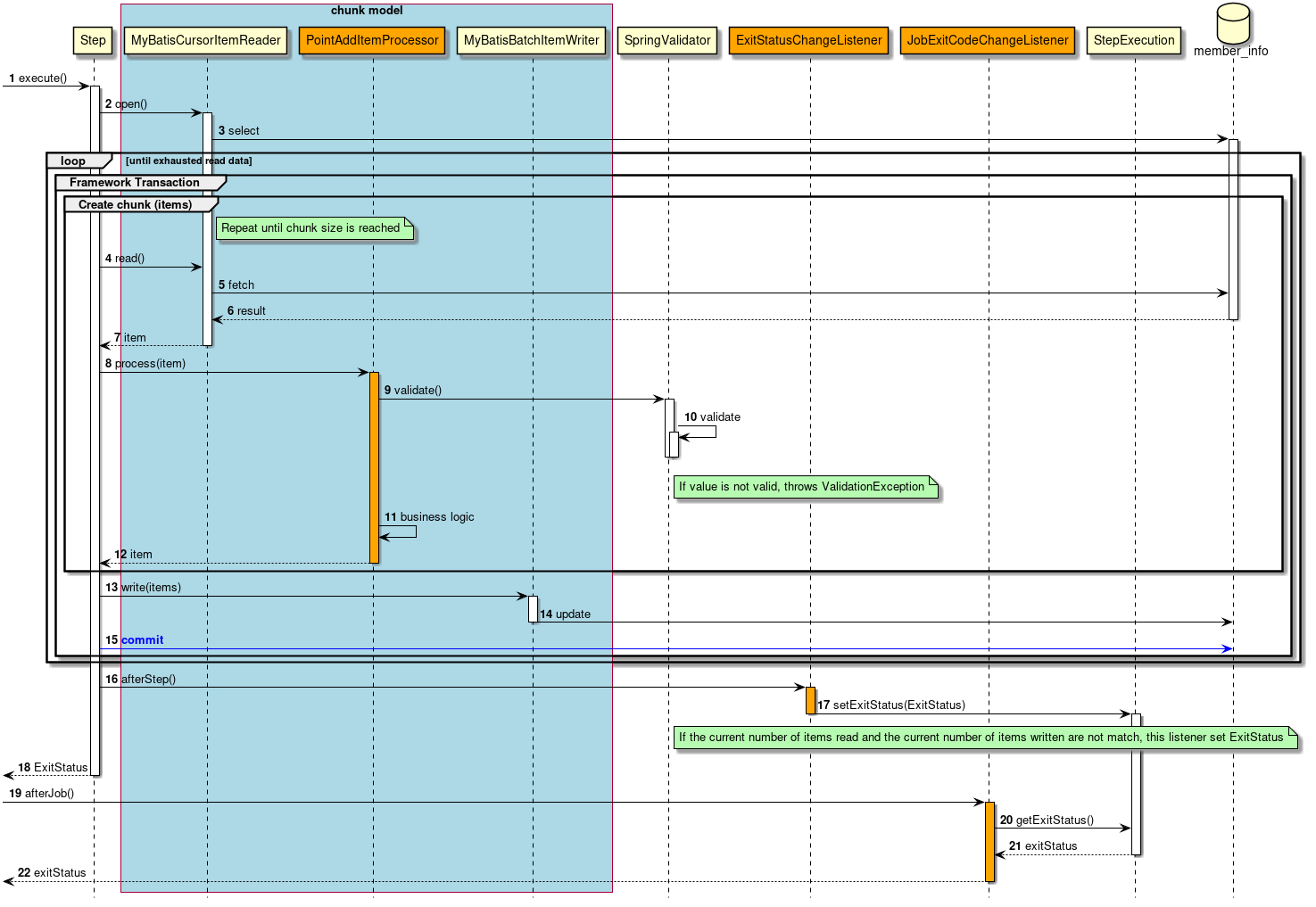

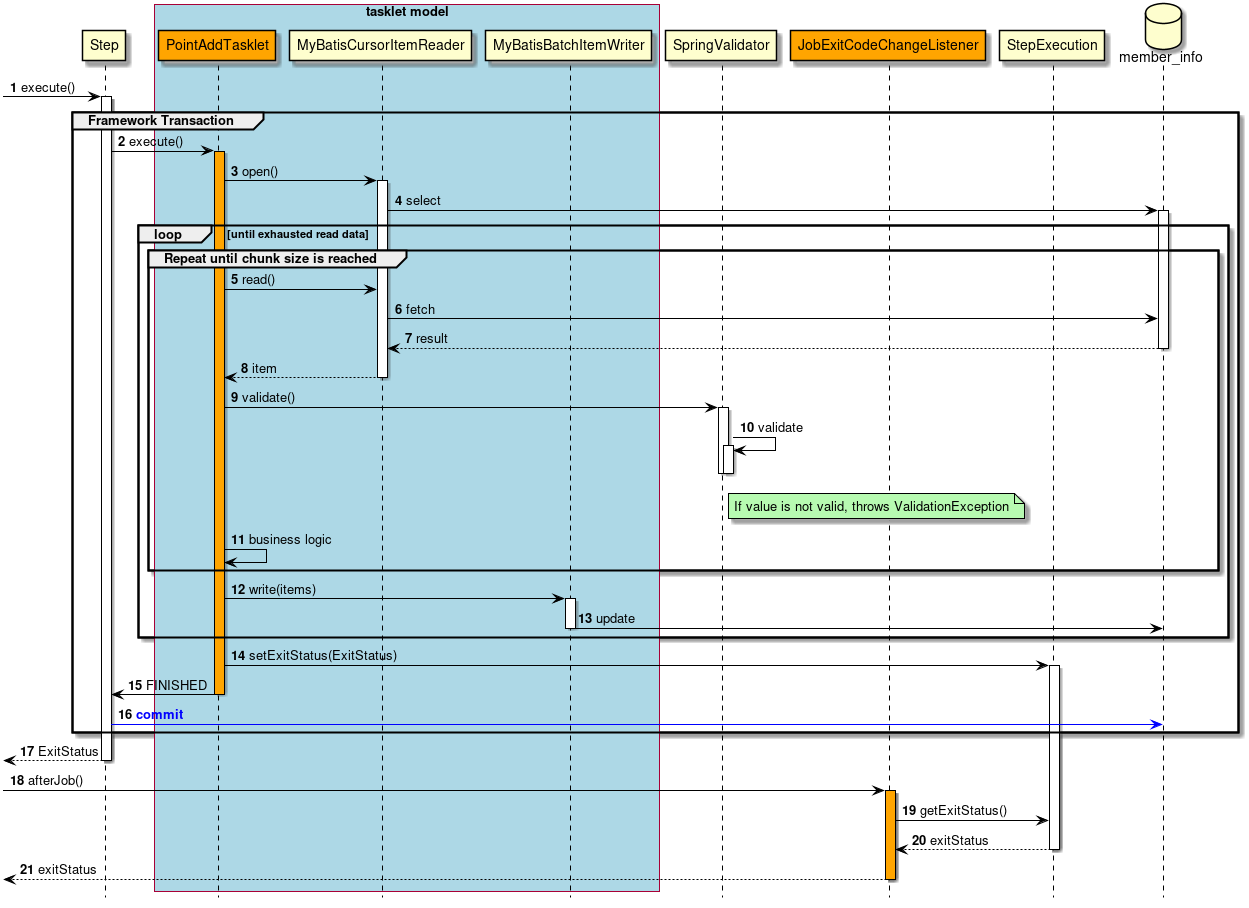

A sequence diagram wherein ChunkOrientedTasklet processes one chunk is shown below.

ChunkOrientedTasklet repeatedly executes ItemReader and ItemProcessor by the chunk size, in other words, reading and processing of data. After completing reading all the data of chunks, data writing process of ItemWriter is called only once and all the processed data in the chunks is passed. Data update processing is designed to be called once for chunks to enable easy organising like addBatch and executeBatch of JDBC.

Next, ItemReader, ItemProcessor and ItemWriter which are responsible for actual processing in chunk processing are introduced. Although it is assumed that the user handles his own implementation for each interface, it can also be covered by a generic concrete class provided by Spring Batch.

Especially, since ItemProcessor describes the business logic itself, the concrete classes are hardly provided by Spring Batch. ItemProcessor interface is implemented while describing the business logic. ItemProcessor is designed to allow types of objects used in I/O to be specified in respective type argument so that typesafe programming is enabled.

An implementation example of a simple ItemProcessor is shown below.

public class MyItemProcessor implements

ItemProcessor<MyInputObject, MyOutputObject> { // (1)

@Override

public MyOutputObject process(MyInputObject item) throws Exception { // (2)

MyOutputObject processedObject = new MyOutputObject(); // (3)

// Coding business logic for item of input data

return processedObject; // (4)

}

}| Sr. No. | Description |

|---|---|

(1) |

Implement ItemProcessor interface which specifies the types of objects used for input and output in respective type argument. |

(2) |

Implement |

(3) |

Create output object and store business logic results processed for the input data item. |

(4) |

Return output object. |

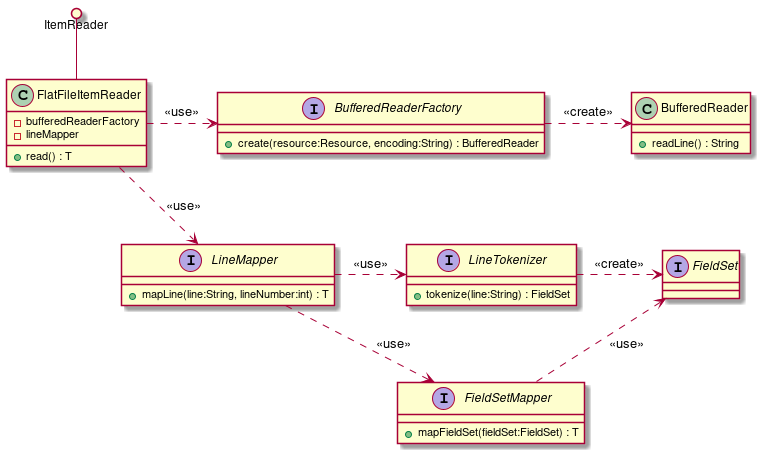

Various concrete classes are offered by Spring Batch for ItemReader or ItemWriter and these are used quite frequently. However, when a file of specific format is to be input or output, a concrete class which implements individual ItemReader or ItemWriter can be created and used.

For implementation of business logic while developing actual application, refer Application development flow.

Representative concrete classes of ItemReader, ItemProcessor and ItemWriter offered by Spring Batch are shown in the end.

| Interface | Concrete class name | Overview |

|---|---|---|

ItemReader |

FlatFileItemReader |

Read flat files (non-structural files) like CSV file. Mapping rules for delimiters and objects can be customised by using Resource object as input. |

StaxEventItemReader |

Read XML file. As the name implies, it is an implementation which reads a XML file based on StAX. |

|

JdbcPagingItemReader |

Execute SQL by using JDBC and read records on the database. When a large amount of data is to be processed on the database, it is necessary to avoid reading all the records on memory, and to read and discard only the data necessary for one processing. |

|

MyBatisCursorItemReader |

Read records on the database in coordination with MyBatis. Spring coordination library offered by MyBatis is provided by MyBatis-Spring. For the difference between Paging and Cursor, it is same as JdbcXXXItemReader except for using MyBatis for implementation. In addition, JpaPagingItemReader, HibernatePagingItemReader and HibernateCursor are provided which read records on the database by coordinating with ItemReaderJPA implementation or Hibernate. Using |

|

JmsItemReader |

Receive messages from JMS or AMQP and read the data contained within it. |

|

ItemProcessor |

PassThroughItemProcessor |

No operation is performed. It is used when processing and modification of input data is not required. |

ValidatingItemProcessor |

Performs input check. It is necessary to implement Spring Batch specific org.springframework.batch.item.validator.Validator for the implementation of input check rules. |

|

CompositeItemProcessor |

Sequentially execute multiple ItemProcessor for identical input data. It is enabled when business logic is to be executed after performing input check using ValidatingItemProcessor. |

|

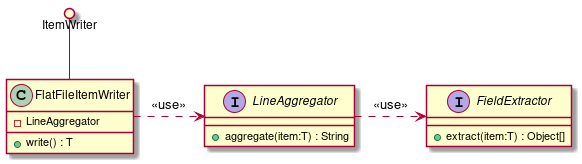

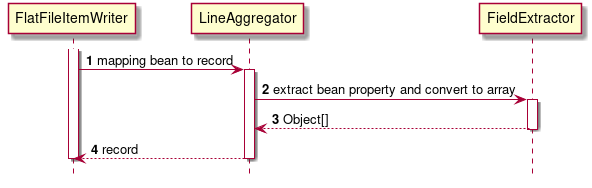

ItemWriter |

FlatFileItemWriter |

Write processed Java object as a flat file like CSV file. Mapping rules for file lines can be customised from delimiters and objects. |

StaxEventItemWriter |

Write processed Java object as a XML file. |

|

JdbcBatchItemWriter |

Execute SQL by using JDBC and output processed Java object to database. Internally JdbcTemplate is used. |

|

MyBatisBatchItemWriter |

Coordinate with MyBatis and output processed Java object to the database. It is provided by Spring coordination library MyBatis-Spring offered by MyBatis. |

|

JmsItemWriter |

Send a message of a processed Java object with JMS or AMQP. |

|

PassThroughItemProcessor omitted

When a job is defined in XML, ItemProcessor setting can be omitted. When it is omitted, input data is passed to ItemWriter without performing any operation similar to PassThroughItemProcessor. ItemProcessor omitted

|

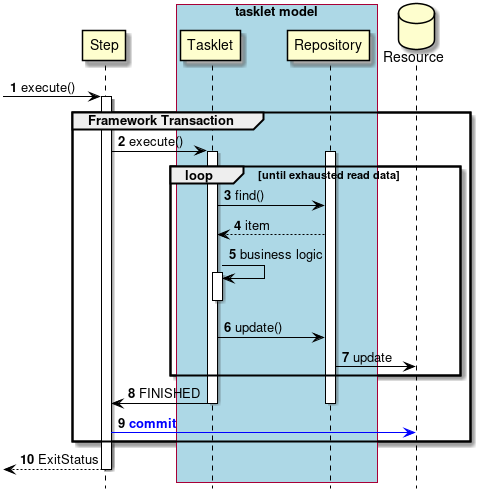

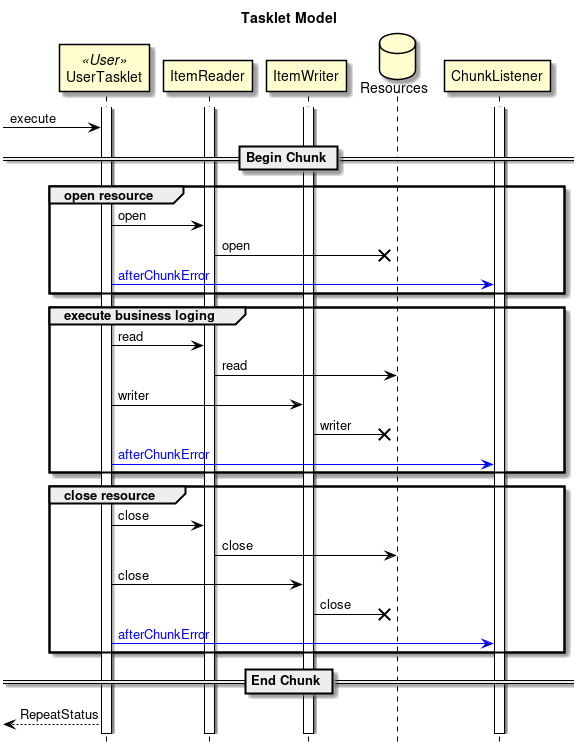

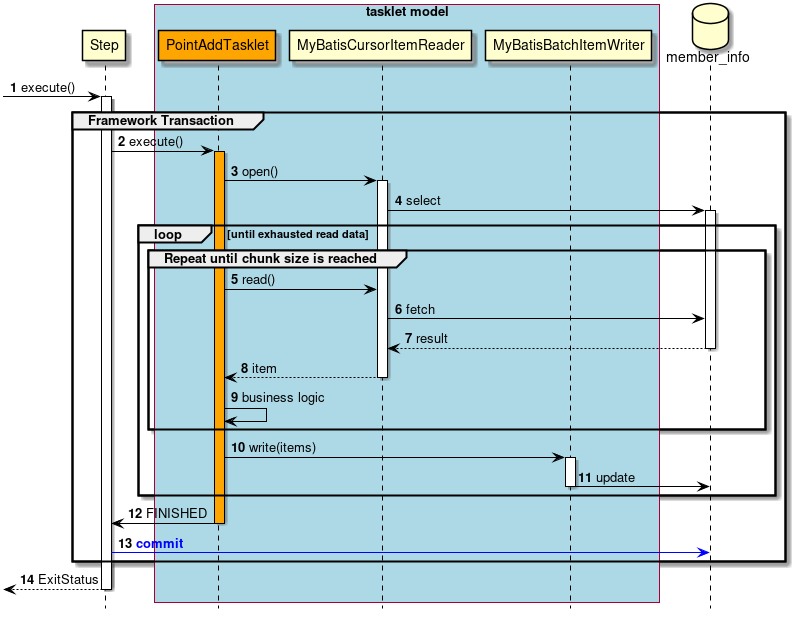

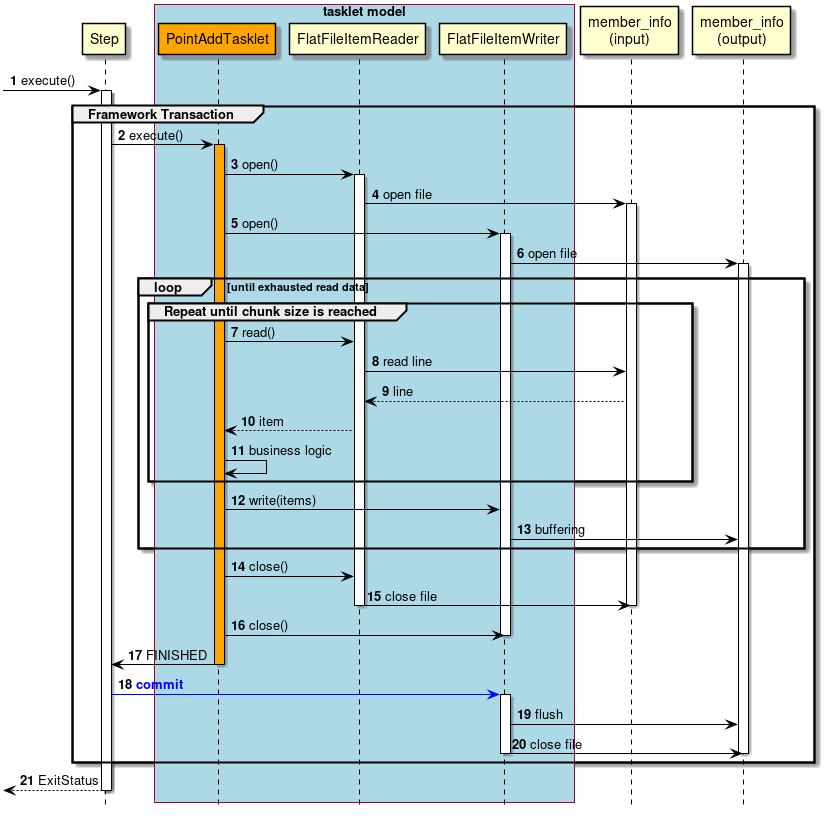

2.3.2.3.2. Tasket model

Chunk model is a framework suitable for batch applications that read multiple input data one by one and perform a series of processing. However, a process which does not fit with the type of chunk processing is also implemented. For example, when system command is to be executed, when only one record in control table is to be updated etc.

In such a case, merits of efficiency obtained by chunk processing are very less and demerits owing to difficult design and implementation are significant. Hence, it is rational to use tasket model.

It is necessary for the user to implement Tasket interface provided by Spring Batch while using a Tasket model. Further, following concrete class is provided in Spring Batch, subsequent description is not given in TERASOLUNA Batch 5.x.

| Class name | Overview |

|---|---|

SystemCommandTasklet |

Tasket to execute system commands asynchronously. Command to be specified in the command property is specified. |

MethodInvokingTaskletAdapter |

Tasket for executing specific methods of POJO class. Specify Bean of target class in targetObject property and name of the method to be executed in targetMethod property. |

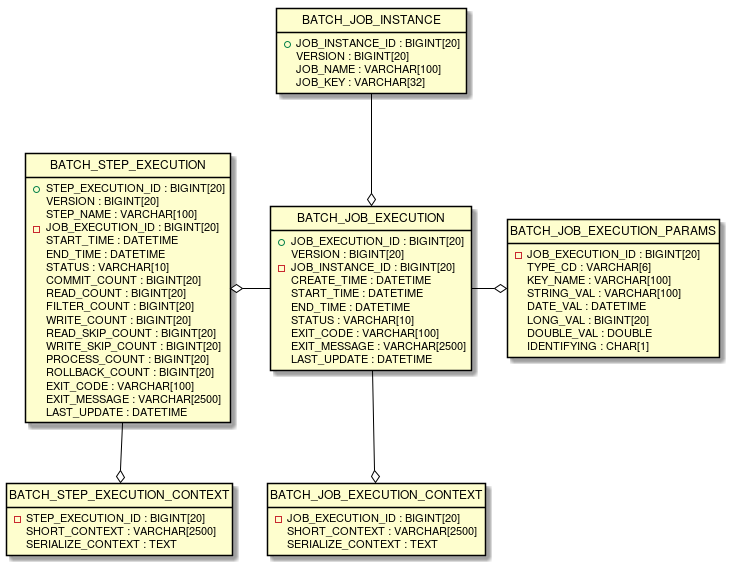

2.3.2.4. Metadata schema of JobRepository

Metadata schema of JobRepository is explained.

Note that, overall picture is explained including the contents explained in Spring Batch reference Appendix B. Meta-Data Schema

Spring Batch metadata table corresponds to a domain object (Entity object) which are represented by Java.

Table |

Entity object |

Overview |

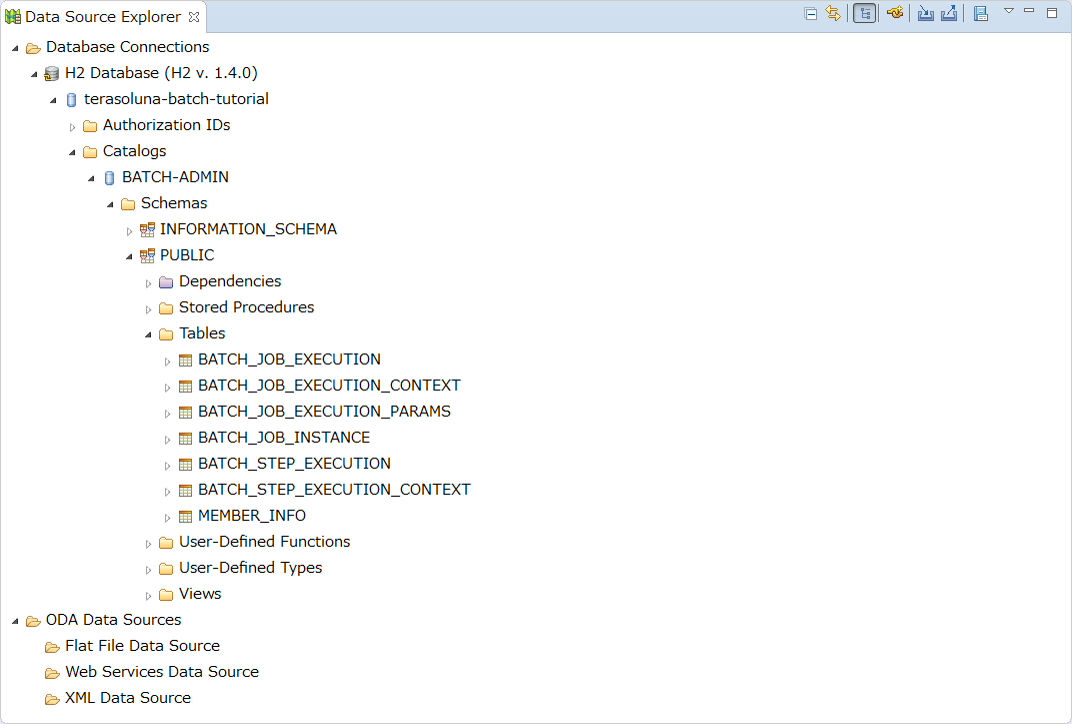

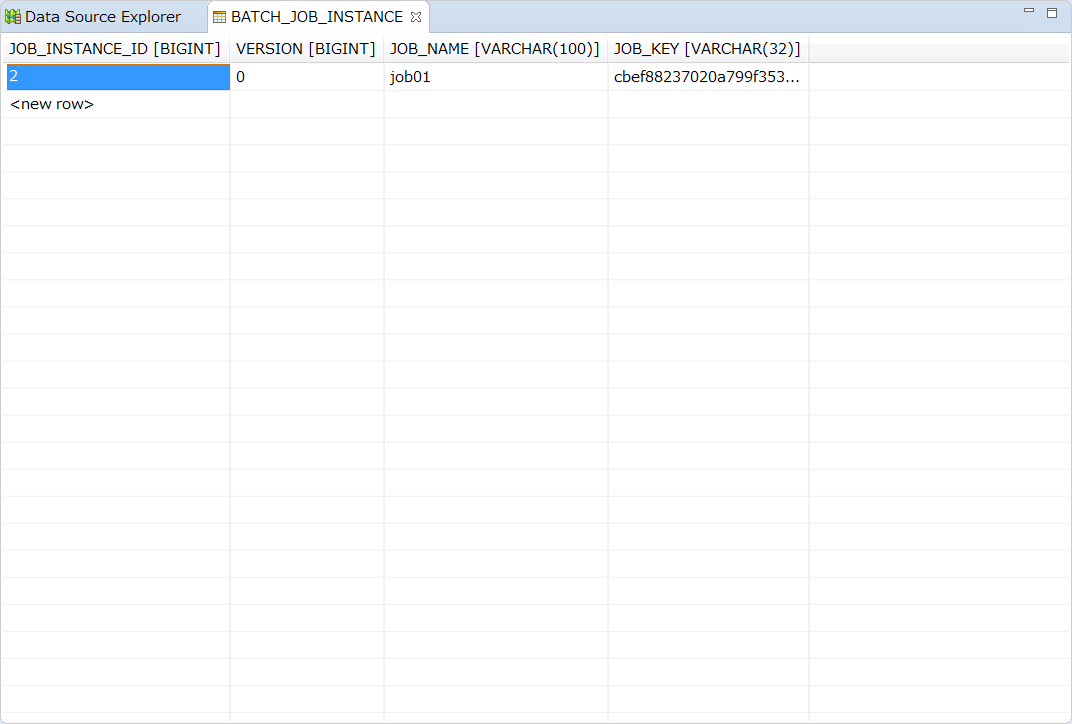

BATCH_JOB_INSTANCE |

JobInstance |

Retains the string which serialises job name and job parameter. |

BATCH_JOB_EXECUTION |

JobExecution |

Retains job status and execution results. |

BATCH_JOB_EXECUTION_PARAMS |

JobExecutionParams |

Retains job parameters assigned at the startup. |

BATCH_JOB_EXECUTION_CONTEXT |

JobExecutionContext |

Retains the context inside the job. |

BATCH_STEP_EXECUTION |

StepExecution |

Retains status and execution results of step, number of commits and rollbacks. |

BATCH_STEP_EXECUTION_CONTEXT |

StepExecutionContext |

Retains context inside the step. |

JobRepository is responsible for accurately storing the contents stored in each Java object, in the table.

|

Regarding the character string stored in the meta data table

Character string stored in the meta data table allows only a restricted number of characters and when this limit is exceeded, character string is truncated.

|

6 ERD models of all the tables and interrelations are shown below.

2.3.2.4.1. Version

Majority of database tables contain version columns. This column is important since Spring Batch adopts an optimistic locking strategy to handle updates to database. This record signifies that it is updated when the value of the version is incremented. When JobRepository updates the value and the version number is changed, an OptimisticLockingFailureException which indicates an occurrence of simultaneous access error is thrown. Other batch jobs may be running on different machines, however, all the jobs use the same database, hence this check is required.

2.3.2.4.2. ID (Sequence) definition

BATCH_JOB_INSTANCE, BATCH_JOB_EXECUTION and BATCH_STEP_EXECUTION all contain column ending with _ID.

These fields act as a primary key for respective tables.

However, these keys are not generated in the database but are rather generated in a separate sequence.

After inserting one of the domain objects in the database, the keys which assign the domain objects should be set in the actual objects so that they can be uniquely identified in Java.

Sequences may not be supported depending on the database. In this case, a table is used instead of each sequence.

2.3.2.4.3. Table definition

Explanation is given for each table item.

BATCH_JOB_INSTANCE table retains all the information related to JobInstance and is at top level of the overall hierarchy.

| Column name | Description |

|---|---|

JOB_INSTANCE_ID |

A primary key which is a unique ID identifying an instance. |

VERSION |

Refer Version. |

JOB_NAME |

Job name. A non-null value since it is necessary for identifying an instance. |

JOB_KEY |

JobParameters which are serialised for uniquely identifying same job as a different instance. |

BATCH_JOB_EXECUTION table retains all the information related to JobExecution object. When a job is executed, new rows are always registered in the table with new JobExecution.

| Column name | Description |

|---|---|

JOB_EXECUTION_ID |

Primary key that uniquely identifies this job execution. |

VERSION |

Refer Version. |

JOB_INSTANCE_ID |

Foreign key from BATCH_JOB_INSTANCE table which shows an instance wherein the job execution belongs. Multiple executions are likely to exist for each instance. |

CREATE_TIME |

Time when the job execution was created. |

START_TIME |

Time when the job execution was started. |

END_TIME |

Indicates the time when the job execution was terminated regardless of whether it was successful or failed. |

STATUS |

A character string which indicates job execution status. It is a character string output by BatchStatus enumeration object. |

EXIT_CODE |

A character string which indicates an exit code of job execution. When it is activated by CommandLineJobRunner, it can be converted to a numeric value. |

EXIT_MESSAGE |

A character string which explains job termination status in detail. When a failure occurs, a character string that includes as many as stack traces as possible is likely. |

LAST_UPDATED |

Time when job execution of the record was last updated. |

BATCH_JOB_EXECUTION_PARAMS table retains all the information related to JobParameters object. It contains a pair of 0 or more keys passed to the job and the value and records the parameters by which the job was executed.

| Column name | Description |

|---|---|

JOB_EXECUTION_ID |

Foreign key from BATCH_JOB_EXECUTION table which executes this job wherein the job parameter belongs. |

TYPE_CD |

A character string which indicates that the data type is string, date, long or double. |

KEY_NAME |

Parameter key. |

STRING_VAL |

Parameter value when data type is string. |

DATE_VAL |

Parameter value when data type is date. |

LONG_VAL |

Parameter value when data type is an integer. |

DOUBLE_VAL |

Parameter value when data type is a real number. |

IDENTIFYING |

A flag which indicates that the parameter is a value to identify that the job instance is unique. |

BATCH_JOB_EXECUTION_CONTEXT table retains all the information related to ExecutionContext of Job. It contains all the job level data required for execution of specific jobs. The data indicates the status that must be fetched when the process is to be executed again after a job failure and enables the failed job to start from the point where processing has stopped.

| Column name | Description |

|---|---|

JOB_EXECUTION_ID |

A foreign key from BATCH_JOB_EXECUTION table which indicates job execution wherein ExecutionContext of Job belongs. |

SHORT_CONTEXT |

A string representation of SERIALIZED_CONTEXT. |

SERIALIZED_CONTEXT |

Overall serialised context. |

BATCH_STEP_EXECUTION table retains all the information related to StepExecution object. This table is very similar to BATCH_JOB_EXECUTION table in many ways. When each JobExecution is created, at least one entry exists for each Step.

| Column name | Description |

|---|---|

STEP_EXECUTION_ID |

Primary key that uniquely identifies the step execution. |

VERSION |

Refer Version. |

STEP_NAME |

Step name. |

JOB_EXECUTION_ID |

Foreign key from BATCH_JOB_EXECUTION table which indicates JobExecution wherein StepExecution belongs |

START_TIME |

Time when step execution was started. |

END_TIME |

Indicates time when step execution ends regardless of whether it is successful or failed. |

STATUS |

A character string that represents status of step execution. It is a string which outputs BatchStatus enumeration object. |

COMMIT_COUNT |

Number of times a transaction is committed. |

READ_COUNT |

Data records read by ItemReader. |

FILTER_COUNT |

Data records filtered by ItemProcessor. |

WRITE_COUNT |

Data records written by ItemWriter. |

READ_SKIP_COUNT |

Data records skipped by ItemReader. |

WRITE_SKIP_COUNT |

Data records skipped by ItemWriter. |

PROCESS_SKIP_COUNT |

Data records skipped by ItemProcessor. |

ROLLBACK_COUNT |

Number of times a transaction is rolled back. |

EXIT_CODE |

A character string which indicates exit code for step execution. When it is activated by using CommandLineJobRunner, it can be changed to a numeric value. |

EXIT_MESSAGE |

A character string which explains step termination status in detail. When a failure occurs, a character string that includes as many as stack traces as possible is likely. |

LAST_UPDATED |

Time when the step execution of the record was last updated. |

BATCH_STEP_EXECUTION_CONTEXT table retains all the information related to ExecutionContext of Step. It contains all the step level data required for execution of specific steps. The data indicates the status that must be fetched when the process is to be executed again after a job failure and enables the failed job to start from the point where processing has stopped.

| Column name | Description |

|---|---|

STEP_EXECUTION_ID |

Foreign key from BATCH_STEP_EXECUTION table which indicates job execution wherein ExecutionContext of Step belongs. |

SHORT_CONTEXT |

String representation of SERIALIZED_CONTEXT. |

SERIALIZED_CONTEXT |

Overall serialized context. |

2.3.2.4.4. DDL script

JAR file of Spring Batch Core contains a sample script which creates a relational table corresponding to several database platforms.

These scripts can be used as it is or additional index or constraints can be changed as required.

The script is included in the package of org.springframework.batch.core and the file name is configured by schema-*.sql.

"*" is the short name for Target Database Platform..

2.3.2.5. Typical performance tuning points

Typical performance tuning points in Spring Batch are explained.

- Adjustment of chunk size

-

Chunk size is increased to reduce overhead occurring due to resource output.

However, if chunk size is too large, it increases load on the resources resulting in deterioration in the performance. Hence, chunk size must be adjusted to a moderate value. - Adjustment of fetch size

-

Fetch size (buffer size) for the resource is increased to reduce overhead occurring due to input from resources.

- Reading of a file efficiently

-

When BeanWrapperFieldSetMapper is used, a record can be mapped to the Bean only by sequentially specifying Bean class and property name. However, it takes time to perform complex operations internally. Processing time can be reduced by using dedicated FieldSetMapper interface implementation which performs mapping.

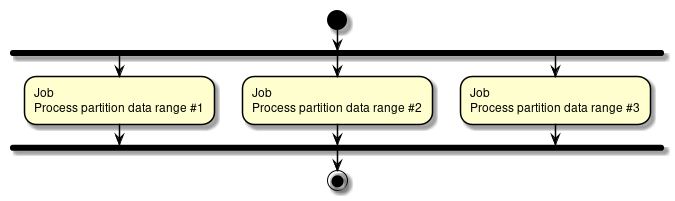

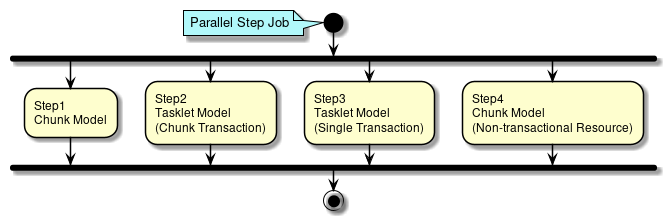

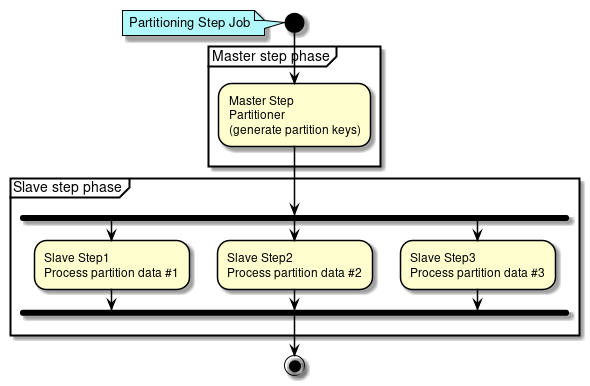

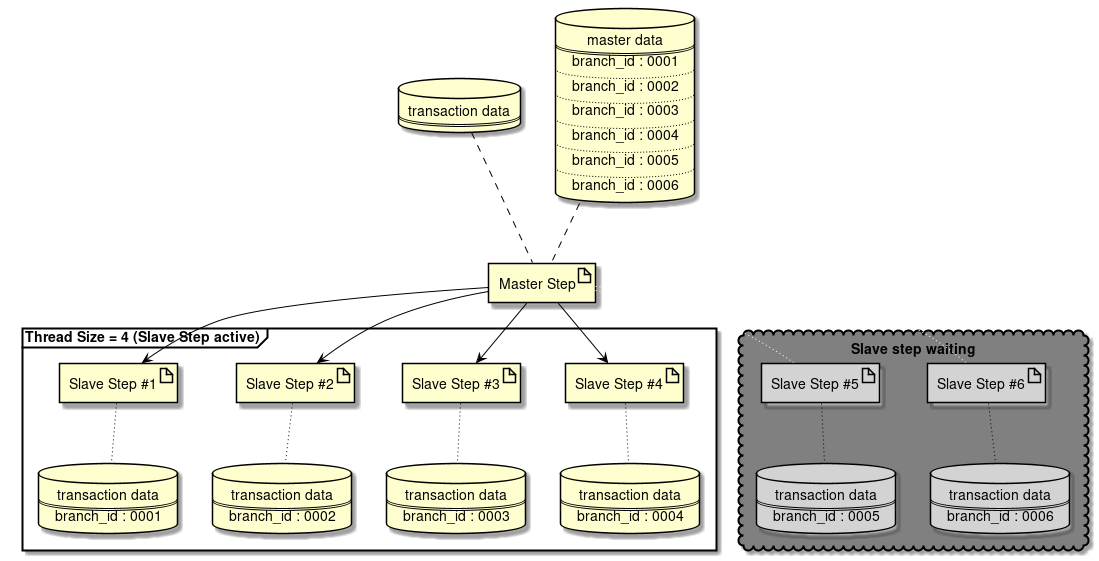

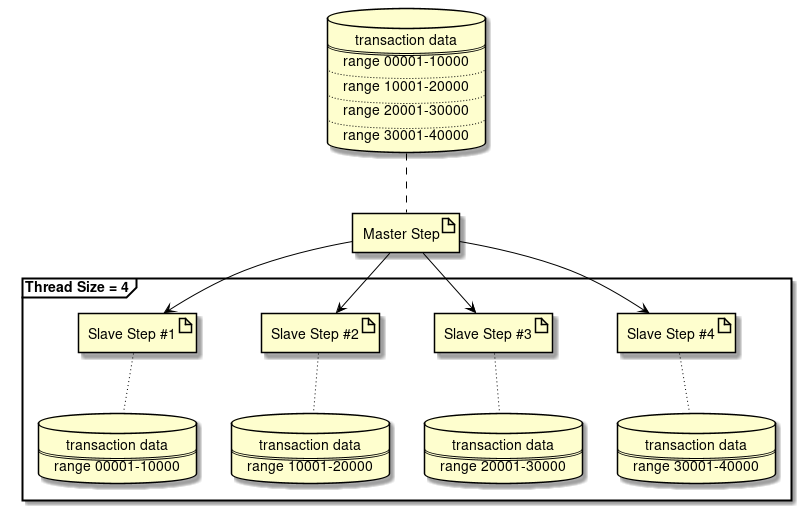

For file I/O details, refer "File access". - Parallel processing, Multiple processing

-

Spring Batch supports parallel processing of Step execution and multiple processing by using data distribution. Parallel processing or multiple processing can be performed and the performance can be improved by running the processes in parallel. However, if number of parallel processes and multiple processes is too large, load on the resources increases resulting in deterioration of performance. Hence, size must be adjusted to a moderate value.

For details of parallel and multiple processing, refer parallel processing and multiple processing. - Reviewing distributed processing

-

Spring Batch also supports distributed processing across multiple machines. Guidelines are same as parallel and multiple processing.

Distributed processing will not be explained in this guideline since the basic design and operational design are complex.

2.4. Architecture of TERASOLUNA Batch Framework for Java (5.x)

2.4.1. Overview

Overall architecture of TERASOLUNA Batch Framework for Java (5.x) is explained.

In TERASOLUNA Batch Framework for Java (5.x), as described in "General batch processing system", it is implemented by using OSS combination focused on Spring Batch.

The configuration schematic diagram of TERASOLUNA Batch Framework for Java (5.x) including hierarchy architecture of Spring Batch is shown below.

- Business Application

-

All job definitions and business logic written by developers.

- spring batch core

-

A core runtime class required to start and control batch jobs offered by Spring Batch.

- spring batch infrastructure

-

Implementation of general ItemReader/ItemProcessor/ItemWriter offered by Spring Batch which are used by developers and core framework itself.

2.4.2. Structural elements of job

The configuration schematic diagram of jobs is shown below in order to explain structural elements of the job.

This section also explains about guidelines which should be finely configured for job and step.

2.4.2.1. Job

A job is an entity that encapsulates entire batch process and is a container for storing steps.

A job can consist of one or more steps.

A job is defined in the Bean definition file by using XML. Multiple jobs can be defined in the job definition file, however, managing jobs tend to become complex.

Hence, TERASOLUNA Batch Framework for Java (5.x) uses following guidelines.

1 job = 1 job definition file

2.4.2.2. Step

Step defines information required for controlling a batch process. A chunk model and a tasklet model can be defined in the step.

- Chunk model

-

-

It is configured by ItemReader, ItemProcessor and ItemWriter.

-

- Tasklet model

-

-

It is configured only by Tasklet.

-

As given in "Rules and precautions to be considered in batch processing", it is necessary to simplify as much as possible and avoid complex logical structures in a single batch process.

Hence, TERASOLUNA Batch Framework for Java (5.x) uses following guidelines.

1 step = 1 batch process = 1 business logic

|

Distribution of business logic in chunk model

If a single business logic is complex and large-scale, the business logic is divided into units. As clear from the schematic diagram, since only one ItemProcessor can be set in 1 step, it looks like the division of business logic is not possible. However, CompositeItemProcessor is an ItemProcessor that consists of multiple ItemProcessors, and the business logic can be divided and executed by using this implementation. |

2.4.3. How to implement Step

2.4.3.1. Chunk model

Definition of chunk model and purpose of use are explained.

- Definition

-

ItemReader, ItemProcessor and ItemWriter implementation and number of chunks are set in ChunkOrientedTasklet. Respective roles are explained.

-

ChunkOrientedTasklet…Call ItemReader/ItemProcessor and create a chunk. Pass created chunk to ItemWriter.

-

ItemReader…Read input data.

-

ItemProcessor…Process read data.

-

ItemWriter…Output processed data in chunk units.

-

-

For overview of chunk model, refer "Chunk model".

<batch:job id="exampleJob">

<batch:step id="exampleStep">

<batch:tasklet>

<batch:chunk reader="reader"

processor="processor"

writer="writer"

commit-interval="100" />

</batch:tasklet>

</batch:step>

</batch:job>- Purpose of use

-

Since it handles a certain amount of data collectively, it is used while handling a large amount of data.

2.4.3.2. Tasklet model

Definition of tasklet model and purpose of use are explained.

- Definition

-

Only Tasklet implementation is set.

For overview of Tasklet model, refer "Tasklet model".

<batch:job id="exampleJob">

<batch:step id="exampleStep">

<batch:tasklet ref="myTasklet">

</batch:step>

</batch:job>- Purpose of use

-

It can be used for executing a process which is not associated with I/O like execution of system commands etc.

Further, it can also be used while committing the data in batches.

2.4.3.3. Functional difference between chunk model and Tasklet model

Explanation is given for the functional difference between chunk model and Tasklet model. Here, only outline is given. Refer to the section of each function for details.

| Function | Chunk model | Tasklet model |

|---|---|---|

Structural elements |

Configured by ItemReader/ItemProcessor/ItemWriter/ChunkOrientedTasklet. |

Configured only by Tasklet. |

Transaction |

A transaction is generated in a chunk unit. |

Processed in 1 transaction. |

Recommended reprocessing method |

Re-run and re-start can be used. |

As a rule, only re-run is used. |

Exception handling |

Handling process becomes easier by using a listener. Individual implementation is also possible. |

Individual implementation is required. |

2.4.4. Running a job method

Running a job method is explained. This contains following.

Respective methods are explained.

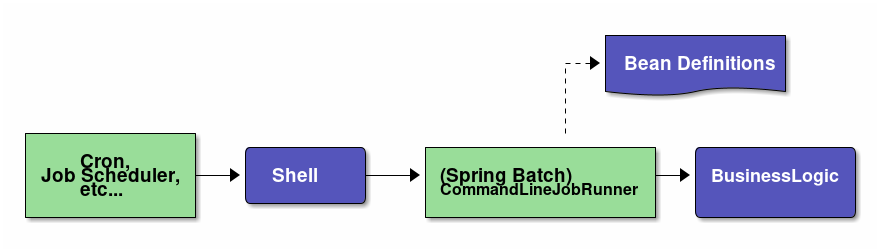

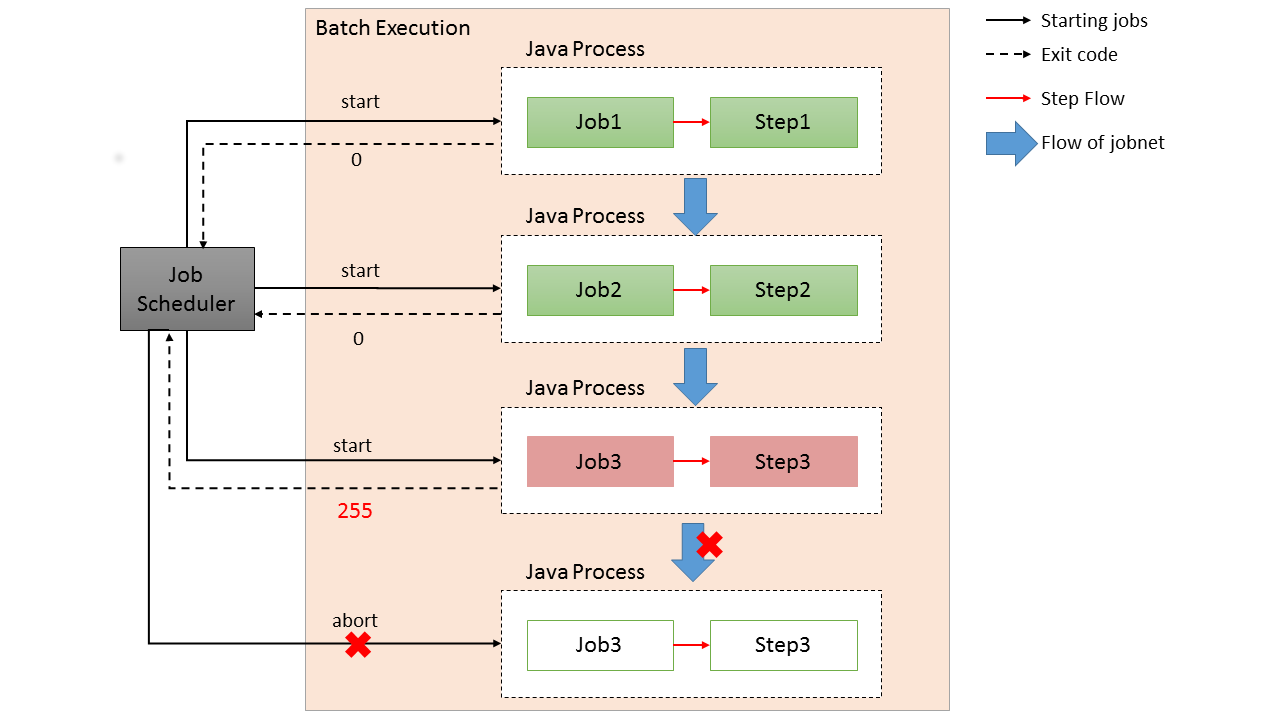

2.4.4.1. Synchronous execution method

Synchronous execution method is an execution method wherein the control is not given back to the boot source from job start to job completion.

A schematic diagram which starts a job from job scheduler is shown.

-

Start a shell script to run a job from job scheduler.

Job scheduler waits until the exit code (numeric value) is returned. -

Start

CommandLineJobRunnerto run a job from shell script.

Shell script waits untilCommandLineJobRunnerreturns an exit code (numeric value). -

CommandLineJobRunnerruns a job. Job returns an exit code (string) toCommandLineJobRunnerafter processing is completed.

CommandLineJobRunnerconverts exit code (string) returned from the job to exit code (numeric value) and returns it to the shell script.

2.4.4.2. Asynchronous execution method

Asynchronous execution method is an execution method wherein the control is given back to boot source immediately after running a job, by executing a job on a different execution base than boot source (a separate thread etc). In this method, it is necessary to fetch job execution results by a means different from that of running a job.

Following 2 methods are explained in TERASOLUNA Batch Framework for Java (5.x).

|

Other asynchronous execution methods

Asynchronous execution can also be performed by using messages like MQ, however since the job execution points are identical, description will be omitted in TERASOLUNA Batch Framework for Java (5.x). |

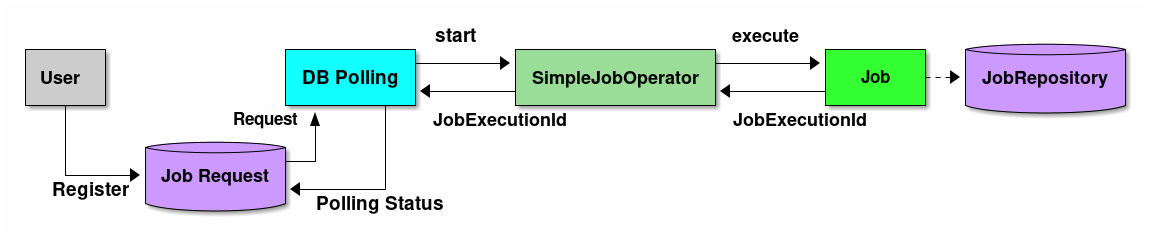

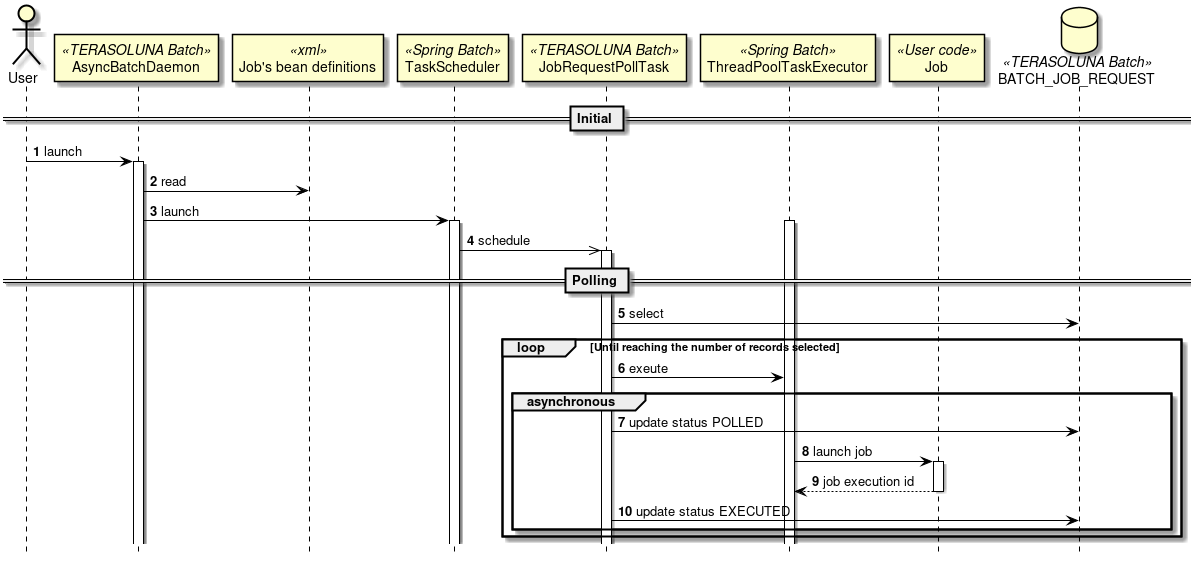

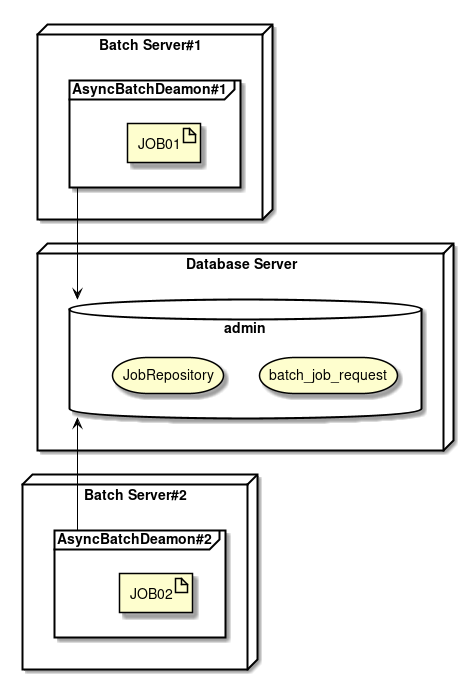

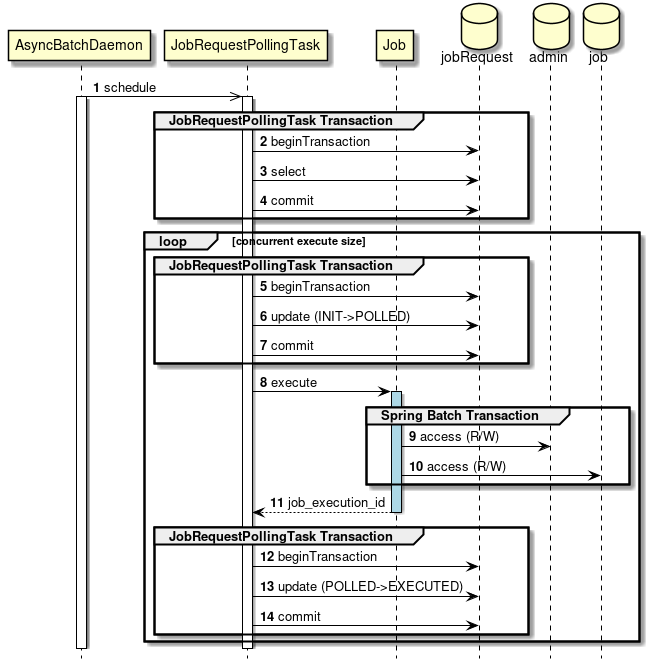

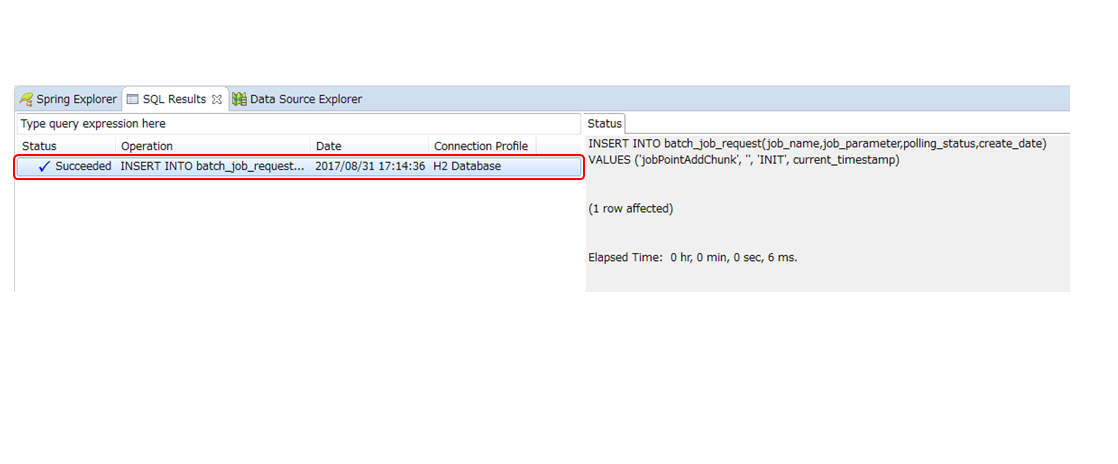

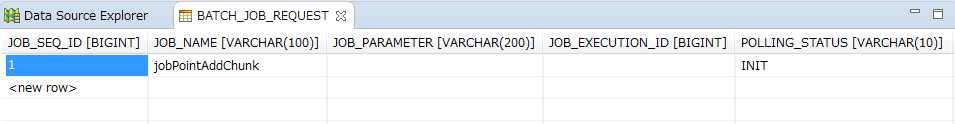

2.4.4.2.1. Asynchronous execution method (DB polling)

"Asynchronous execution (DB polling)" is a method wherein a job execution request is registered in the database, polling of the request is done and job is executed.

TERASOLUNA Batch Framework for Java (5.x) supports DB polling function. The schematic diagram of start by DB polling offered is shown.

-

User registers a job request to the database.

-

DB polling function periodically monitors the registration of the job request and executes the corresponding job when the registration is detected.

-

Run the job from SimpleJobOperator and receive

JobExecutionIdafter completion of the job. -

JobExecutionId is an ID which uniquely identifies job execution and execution results are browsed from JobRepository by using this ID.

-

Job execution results are registered in JobRepository by using Spring Batch system.

-

DB polling is itself executed asynchronously.

-

-

DB polling function updates JobExecutionId returned from SimpleJobOperator and the job request that started the status.

-

Job process progress and results are referred separately by using JobExecutionId.

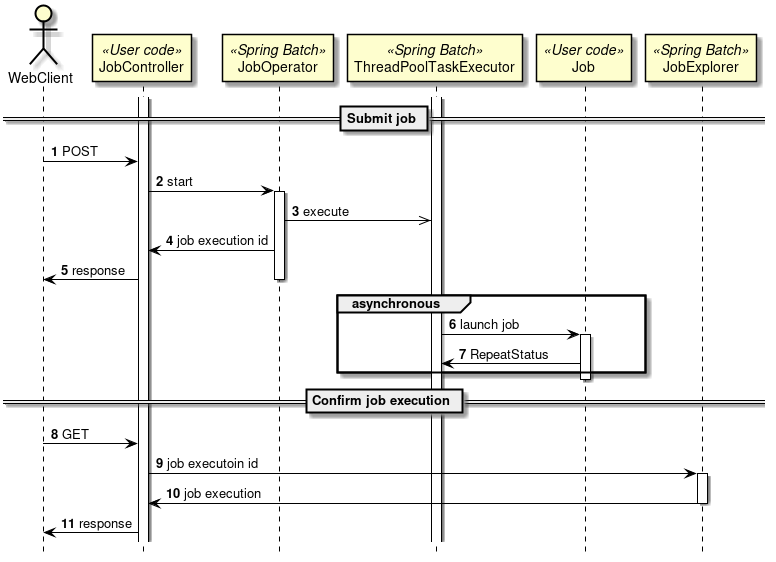

2.4.4.2.2. Asynchronous execution method (Web container)

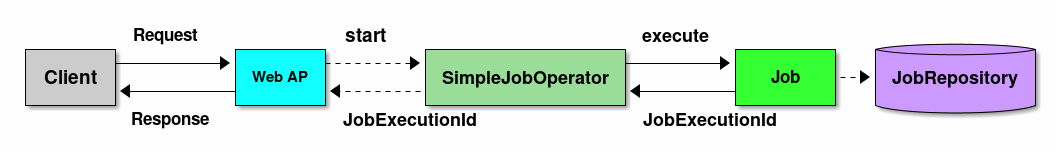

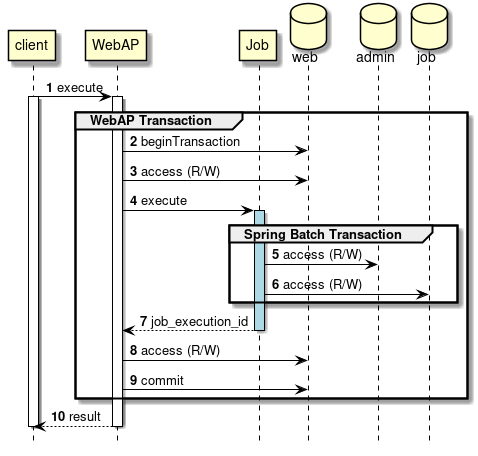

"Asynchronous execution (Web container)" is a method wherein a job is executed asynchronously using the request sent to web application on the web container as a trigger.* A Web application can return a response immediately after starting without waiting for the job to end.

-

Send a request from a client to Web application.

-

Web application asynchronously executes the job requested from a request.

-

Receive

`JobExecutionIdimmediately after starting a job from SimpleJobOperator. -

Job execution results are registered in JobRepository by using Spring Batch system.

-

-

Web application returns a response to the client without waiting for the job to end.

-

Job process progress and results are browsed separately by using JobExecutionId.

Further, it can also be linked with Web application configured by TERASOLUNA Server Framework for Java (5.x).

2.4.5. Points to be considered while using

Points to be considered while using TERASOLUNA Batch Framework for Java (5.x) are shown.

- Running a job method

-

- Synchronous execution method

-

It is used when job is run as per schedule and batch processing is carried out by combining multiple jobs.

- Asynchronous execution method (DB polling)

-

It is used in delayed processing, continuous execution of jobs with a short processing time, aggregation of large quantity of jobs.

- Asynchronous execution method (Web container)

-

Similar to DB polling, however it is used when an immediate action is required for the startup.

- Implementation method

-

- Chunk model

-

It is used when a large quantity of data is to be processed efficiently.

- Tasklet model

-

It is used for simple processing, processing that is difficult to standardize and for the processes wherein data is to be processed collectively.

3. Methodology of application development

3.1. Development of batch application

The development of batch application is explained in the following flow.

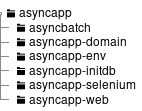

3.1.1. What is blank project

Blank project is the template of development project wherein various settings are made in advance such as Spring Batch, MyBatis3

and it is the starting point of application development.

In this guideline, a blank project with a single project structure is provided.

Refer to Project structure for the explanation of structure.

|

Difference from TERASOLUNA Server 5.x

Multi-project structure is recommended forTERASOLUNA Server 5.x. The reason is mainly to enjoy the following merits.

However, in this guideline, a single project structure is provided unlike TERASOLUNA Server 5.x. This point should be considered for batch application also, however,

by providing single project structure, accessing the resources related to one job is given priority. |

3.1.2. Creation of project

How to create a project using archetype:generate of Maven Archetype Plugin is explained.

|

Regarding prerequisites of creating environment

Prerequisites are explained below.

|

Execute the following commands in the directory where project is created.

C:\xxx> mvn archetype:generate ^

-DarchetypeGroupId=org.terasoluna.batch ^

-DarchetypeArtifactId=terasoluna-batch-archetype ^

-DarchetypeVersion=5.1.1.RELEASE$ mvn archetype:generate \

-DarchetypeGroupId=org.terasoluna.batch \

-DarchetypeArtifactId=terasoluna-batch-archetype \

-DarchetypeVersion=5.1.1.RELEASENext, set the following to Interactive mode in accordance with the status of the user.

-

groupId

-

artifactId

-

version

-

package

An example of setting and executing the value is shown below.

| Item name | Setting example |

|---|---|

groupId |

com.example.batch |

artifactId |

batch |

version |

1.0.0-SNAPSHOT |

package |

com.example.batch |

C:\xxx>mvn archetype:generate ^

More? -DarchetypeGroupId=org.terasoluna.batch ^

More? -DarchetypeArtifactId=terasoluna-batch-archetype ^

More? -DarchetypeVersion=5.1.1.RELEASE

[INFO] Scanning for projects…

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building Maven Stub Project (No POM) 1

[INFO] ------------------------------------------------------------------------

(.. omitted)

Define value for property 'groupId': com.example.batch

Define value for property 'artifactId': batch

Define value for property 'version' 1.0-SNAPSHOT: : 1.0.0-SNAPSHOT

Define value for property 'package' com.example.batch: :

Confirm properties configuration:

groupId: com.example.batch

artifactId: batch

version: 1.0.0-SNAPSHOT

package: com.example.batch

Y: : y

[INFO] ------------------------------------------------------------------------

[INFO] Using following parameters for creating project from Archetype: terasolua-batch-archetype:5.1.1.RELEASE

[INFO] ------------------------------------------------------------------------

[INFO] Parameter: groupId, Value: com.example.batch

[INFO] Parameter: artifactId, Value: batch

[INFO] Parameter: version, Value: 1.0.0-SNAPSHOT

[INFO] Parameter: package, Value: com.example.batch

[INFO] Parameter: packageInPathFormat, Value: com/example/batch

[INFO] Parameter: package, Value: com.example.batch

[INFO] Parameter: version, Value: 1.0.0-SNAPSHOT

[INFO] Parameter: groupId, Value: com.example.batch

[INFO] Parameter: artifactId, Value: batch

[INFO] Project created from Archetype in dir: C:\xxx\batch

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 36.952 s

[INFO] Finished at: 2017-07-25T14:23:42+09:00

[INFO] Final Memory: 14M/129M

[INFO] ------------------------------------------------------------------------$ mvn archetype:generate \

> -DarchetypeGroupId=org.terasoluna.batch \

> -DarchetypeArtifactId=terasoluna-batch-archetype \

> -DarchetypeVersion=5.1.1.RELEASE

[INFO] Scanning for projects…

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building Maven Stub Project (No POM) 1

[INFO] ------------------------------------------------------------------------

(.. omitted)

Define value for property 'groupId': com.example.batch

Define value for property 'artifactId': batch

Define value for property 'version' 1.0-SNAPSHOT: : 1.0.0-SNAPSHOT

Define value for property 'package' com.example.batch: :

Confirm properties configuration:

groupId: com.example.batch

artifactId: batch

version: 1.0.0-SNAPSHOT

package: com.example.batch

Y: : y

[INFO] ----------------------------------------------------------------------------

[INFO] Using following parameters for creating project from Archetype: terasoluna-batch-archetype:5.1.1.RELEASE

[INFO] ----------------------------------------------------------------------------

[INFO] Parameter: groupId, Value: com.example.batch

[INFO] Parameter: artifactId, Value: batch

[INFO] Parameter: version, Value: 1.0.0-SNAPSHOT

[INFO] Parameter: package, Value: com.example.batch

[INFO] Parameter: packageInPathFormat, Value: com/example/batch

[INFO] Parameter: package, Value: com.example.batch

[INFO] Parameter: version, Value: 1.0.0-SNAPSHOT

[INFO] Parameter: groupId, Value: com.example.batch

[INFO] Parameter: artifactId, Value: batch

[INFO] Project created from Archetype in dir: C:\xxx\batch

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 01:19 min

[INFO] Finished at: 2017-07-25T14:20:09+09:00

[INFO] Final Memory: 17M/201M

[INFO] ------------------------------------------------------------------------The creation of project is completed by the above execution.

It can be confirmed whether the project was created properly by the following points.

C:\xxx>mvn clean dependency:copy-dependencies -DoutputDirectory=lib package

C:\xxx>java -cp "lib/*;target/*" ^

org.springframework.batch.core.launch.support.CommandLineJobRunner ^

META-INF/jobs/job01.xml job01$ mvn clean dependency:copy-dependencies -DoutputDirectory=lib package

$ java -cp 'lib/*:target/*' \

org.springframework.batch.core.launch.support.CommandLineJobRunner \

META-INF/jobs/job01.xml job01It is created properly if the following output is obtained.

C:\xxx>mvn clean dependency:copy-dependencies -DoutputDirectory=lib package

[INFO] Scanning for projects...

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building TERASOLUNA Batch Framework for Java (5.x) Blank Project 1.0.0-SNAPSHOT

[INFO] ------------------------------------------------------------------------

(.. omitted)

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 11.007 s

[INFO] Finished at: 2017-07-25T14:24:36+09:00

[INFO] Final Memory: 23M/165M

[INFO] ------------------------------------------------------------------------

C:\xxx>java -cp "lib/*;target/*" ^

More? org.springframework.batch.core.launch.support.CommandLineJobRunner ^

More? META-INF/jobs/job01.xml job01

(.. omitted)

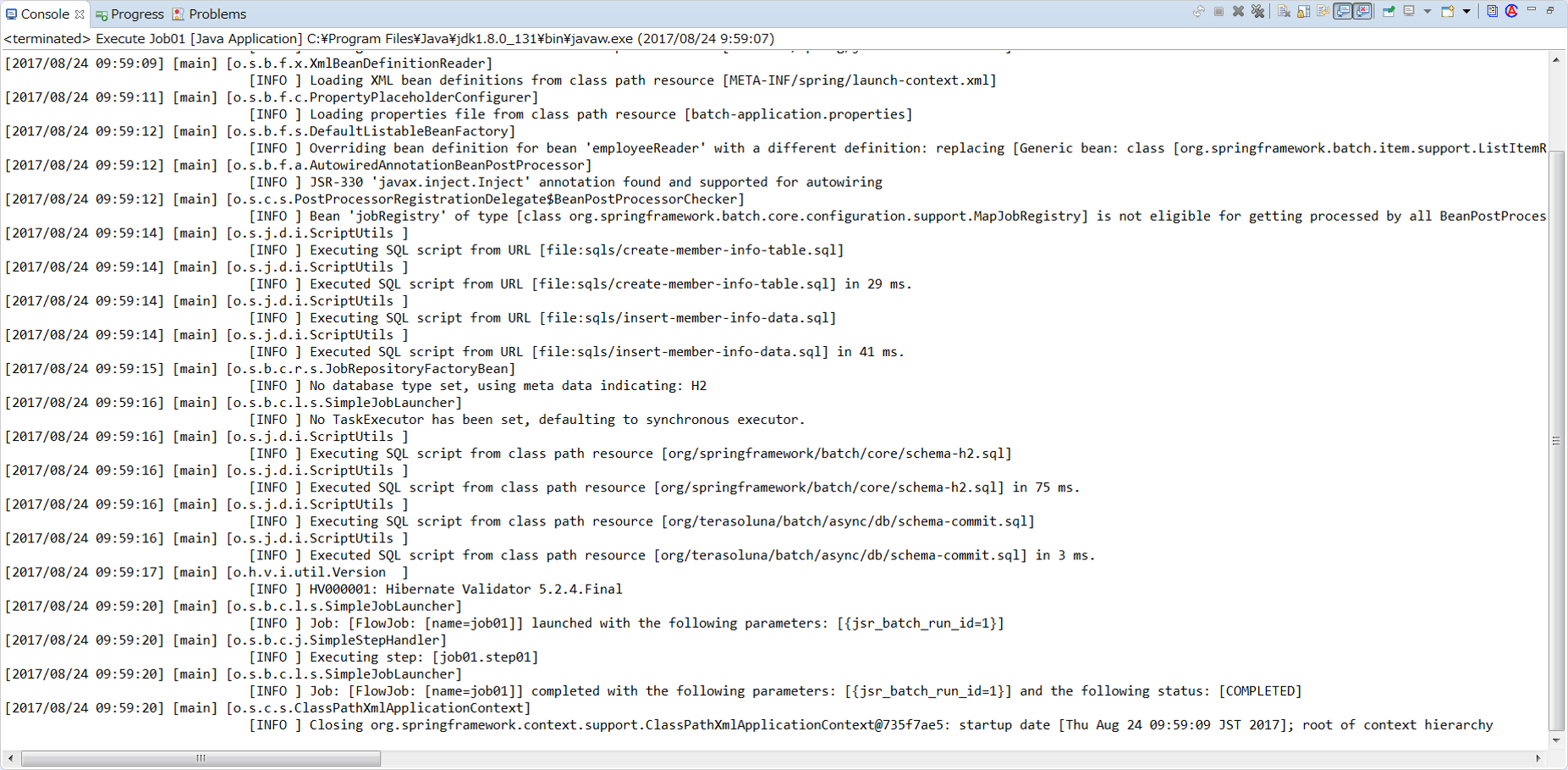

[2017/07/25 14:25:22] [main] [o.s.b.c.l.s.SimpleJobLauncher] [INFO ] Job: [FlowJob: [name=job01]] launched with the following parameters: [{jsr_batch_run_id=1}]

[2017/07/25 14:25:22] [main] [o.s.b.c.j.SimpleStepHandler] [INFO ] Executing step: [job01.step01]

[2017/07/25 14:25:23] [main] [o.s.b.c.l.s.SimpleJobLauncher] [INFO ] Job: [FlowJob: [name=job01]] completed with the following parameters: [{jsr_batch_run_id=1}] and the following status: [COMPLETED]

[2017/07/25 14:25:23] [main] [o.s.c.s.ClassPathXmlApplicationContext] [INFO ] Closing org.springframework.context.support.ClassPathXmlApplicationContext@62043840: startup date [Tue Jul 25 14:25:20 JST 2017]; root of context hierarchy$ mvn clean dependency:copy-dependencies -DoutputDirectory=lib package

[INFO] Scanning for projects...

[INFO]

[INFO] ------------------------------------------------------------------------

[INFO] Building TERASOLUNA Batch Framework for Java (5.x) Blank Project 1.0.0-SNAPSHOT

[INFO] ------------------------------------------------------------------------

(.. omitted)

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 10.827 s

[INFO] Finished at: 2017-07-25T14:21:19+09:00

[INFO] Final Memory: 27M/276M

[INFO] ------------------------------------------------------------------------

$ java -cp 'lib/*:target/*' \

> org.springframework.batch.core.launch.support.CommandLineJobRunner \

> META-INF/jobs/job01.xml job01

[2017/07/25 14:21:49] [main] [o.s.c.s.ClassPathXmlApplicationContext] [INFO ] Refreshing org.springframework.context.support.ClassPathXmlApplicationContext@62043840: startup date [Tue Jul 25 14:21:49 JST 2017]; root of context hierarchy

(.. omitted)

[2017/07/25 14:21:52] [main] [o.s.b.c.l.s.SimpleJobLauncher] [INFO ] Job: [FlowJob: [name=job01]] launched with the following parameters: [{jsr_batch_run_id=1}]

[2017/07/25 14:21:52] [main] [o.s.b.c.j.SimpleStepHandler] [INFO ] Executing step: [job01.step01]

[2017/07/25 14:21:52] [main] [o.s.b.c.l.s.SimpleJobLauncher] [INFO ] Job: [FlowJob: [name=job01]] completed with the following parameters: [{jsr_batch_run_id=1}] and the following status: [COMPLETED]

[2017/07/25 14:21:52] [main] [o.s.c.s.ClassPathXmlApplicationContext] [INFO ] Closing org.springframework.context.support.ClassPathXmlApplicationContext@62043840: startup date [Tue Jul 25 14:21:49 JST 2017]; root of context hierarchy3.1.3. Project structure

Project structure that was created above, is explained. Project structure should be made by considering the following points.

-

Implement the job which does not depend on the startup method

-

Save the efforts of performing various settings such as Spring Batch, MyBatis

-

Make the environment dependent switching easy

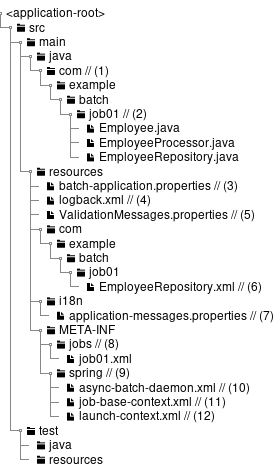

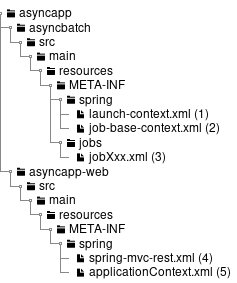

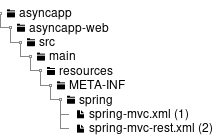

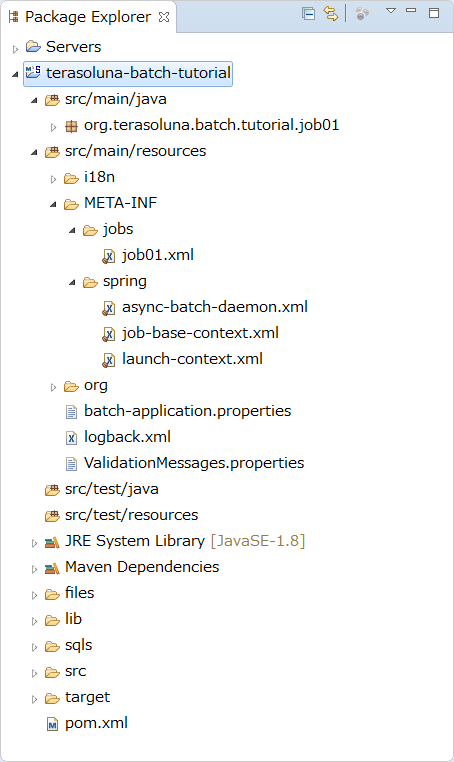

The structure is shown and each element is explained below.

(It is explained based on the output at the time of executing the above mvn archetype:generate to easily understand.)

| Sr. No. | Explanation |

|---|---|

(1) |

root package that stores various classes of the entire batch application. |

(2) |

Package that stores various classes related to job01. You can customize it with reference to default state, however, consider making it easier to judge the resources specific to the job. |

(3) |

Configuration file of the entire batch application. |

(4) |

Configuration file of Logback(log output). |

(5) |

Configuration file that defines messages to be displayed when an error occurs during the input check using BeanValidation. |

(6) |

Mapper XML file that pairs with Mapper interface of MyBatis3. |

(7) |

Property file that defines messages used mainly for log output. |

(8) |

Directory that stores job-specific Bean definition file. |

(9) |

Directory that stores Bean definition file related to the entire batch application. |

(10) |

Bean definition file that describes settings related to asynchronous execution (DB polling) function. |

(11) |

Bean definition file to reduce various settings by importing in a job-specific Bean definition file. |

(12) |

Bean definition file for setting Spring Batch behavior and common jobs. |

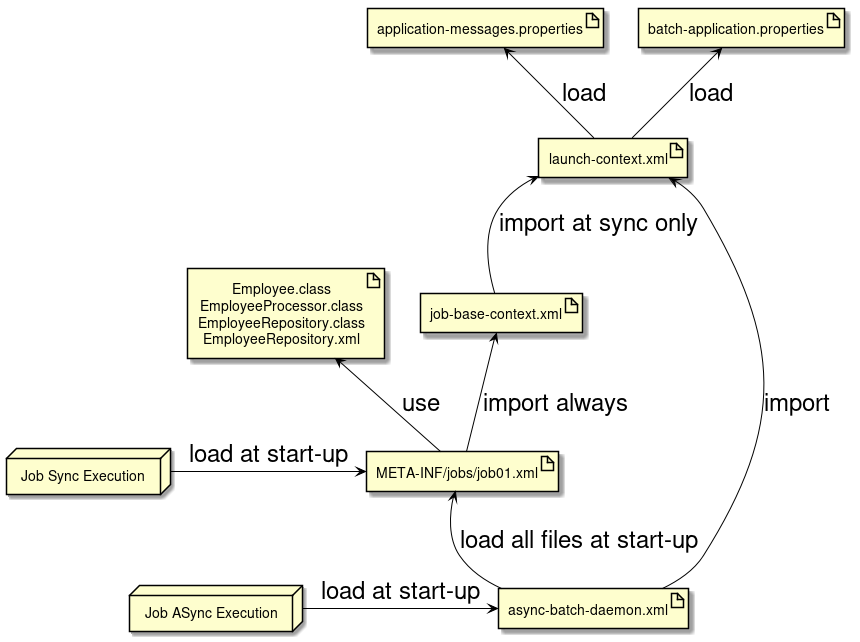

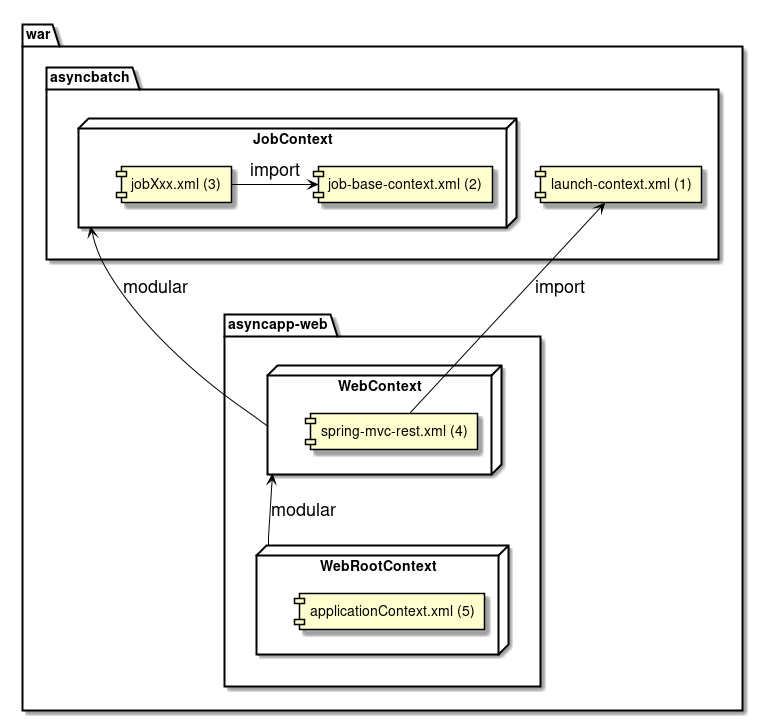

Relation figure of each file is shown below.

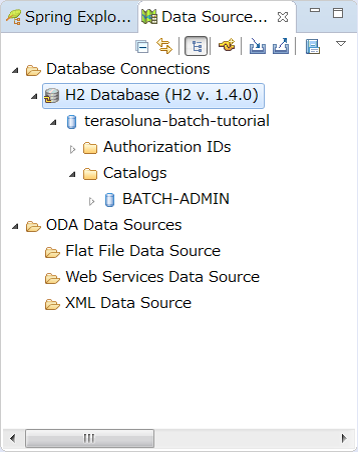

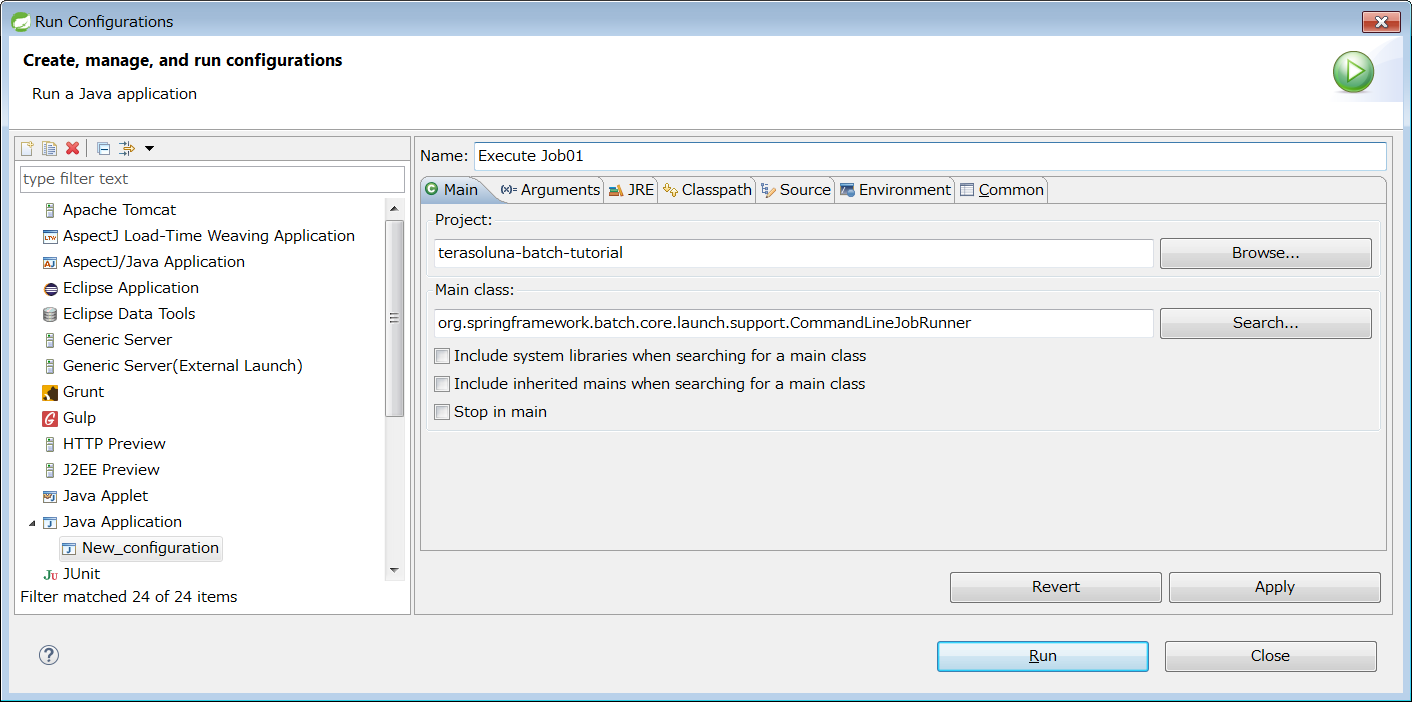

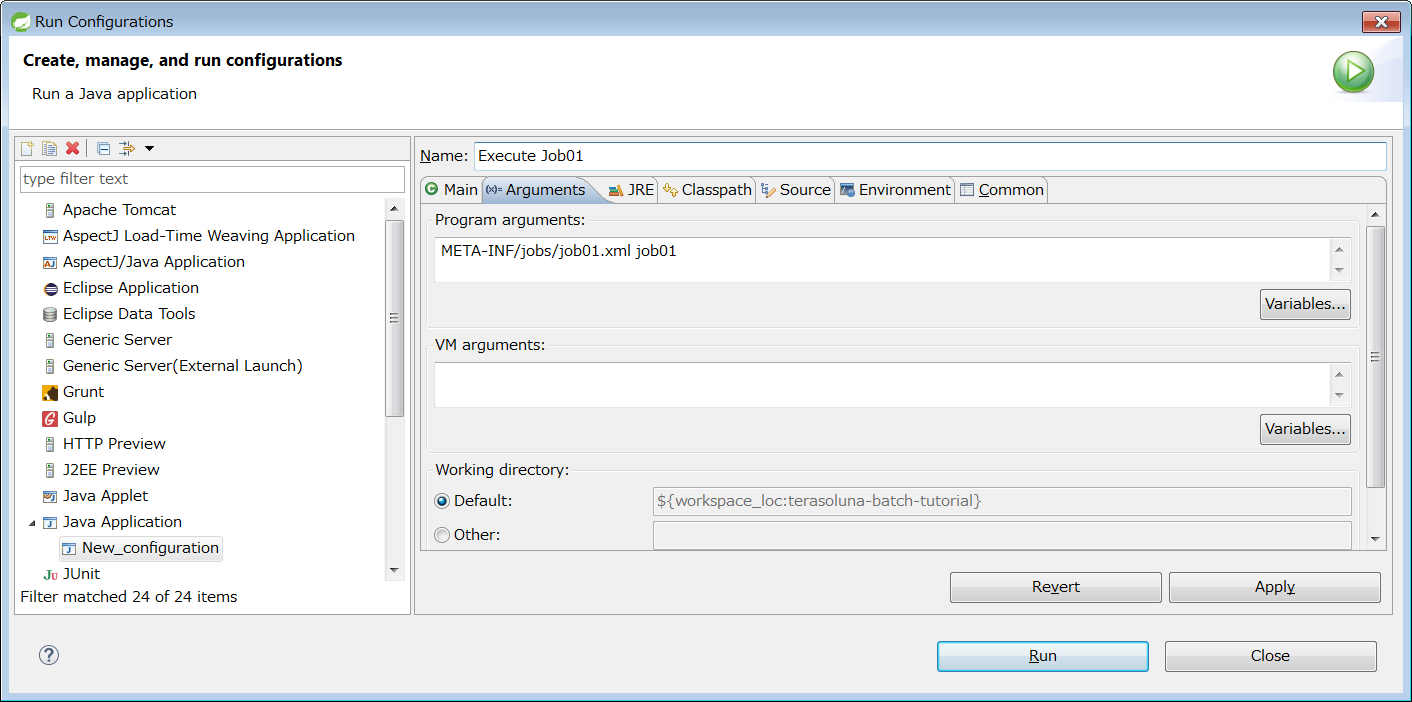

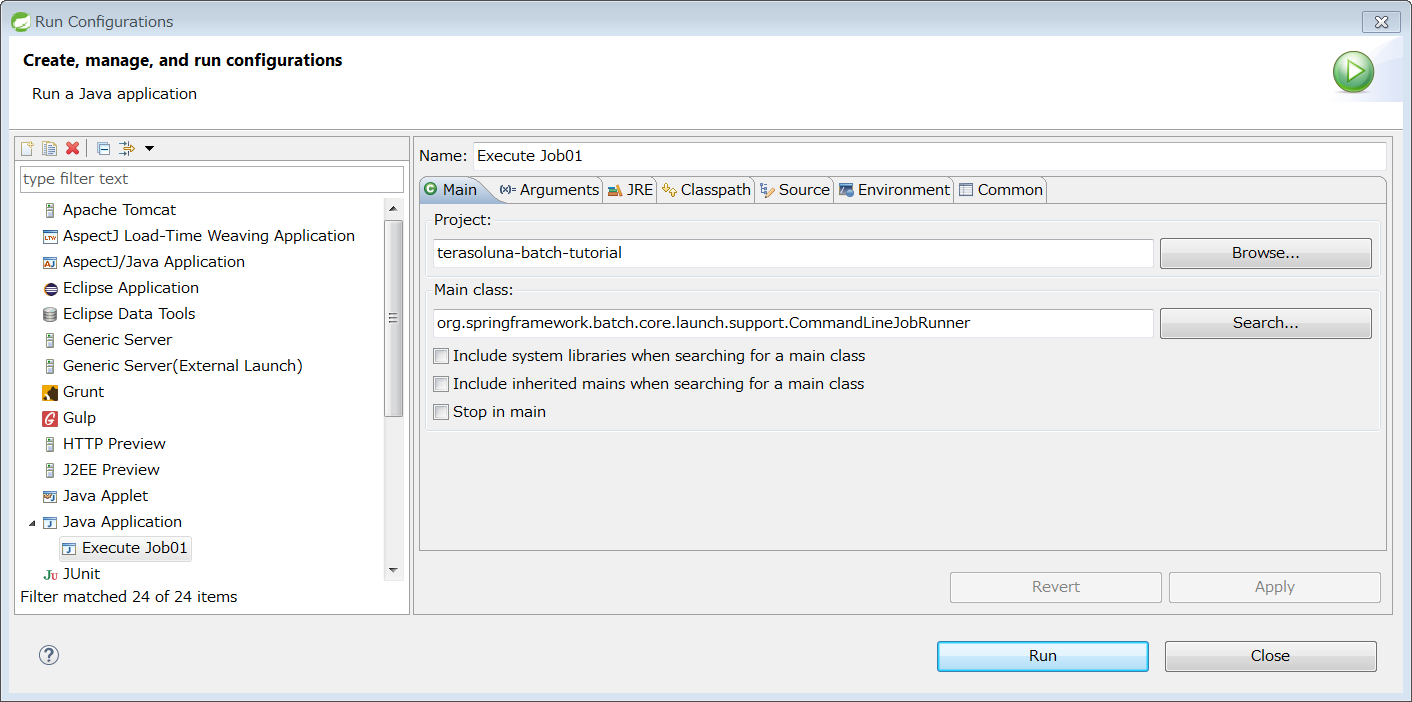

3.1.4. Flow of development

Explain the flow of developing jobs.

Here, we will focus on understanding the general flow and not the detailed explanation.

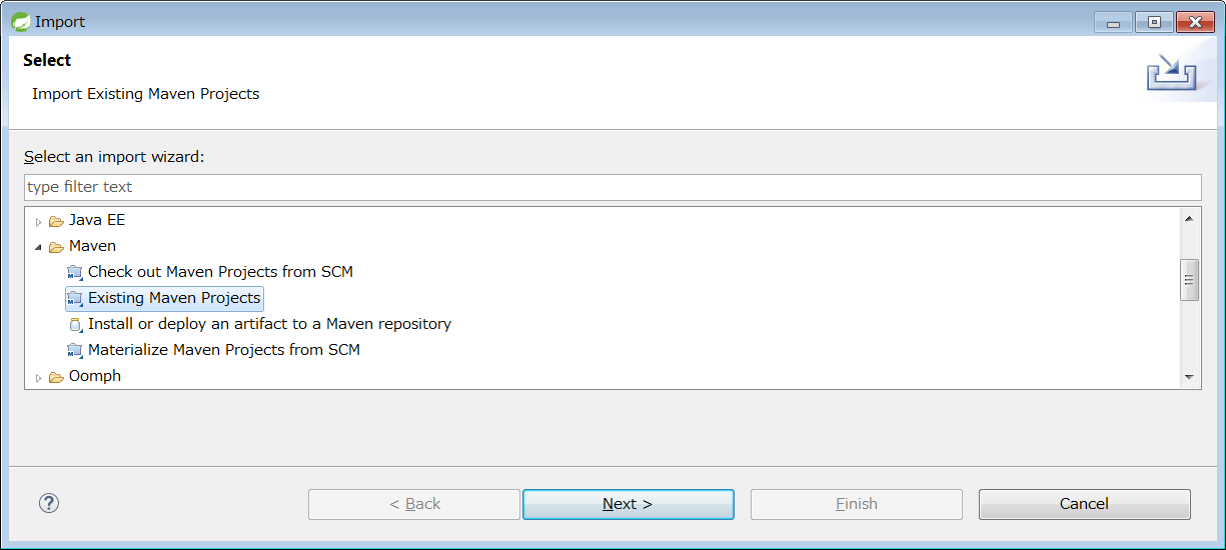

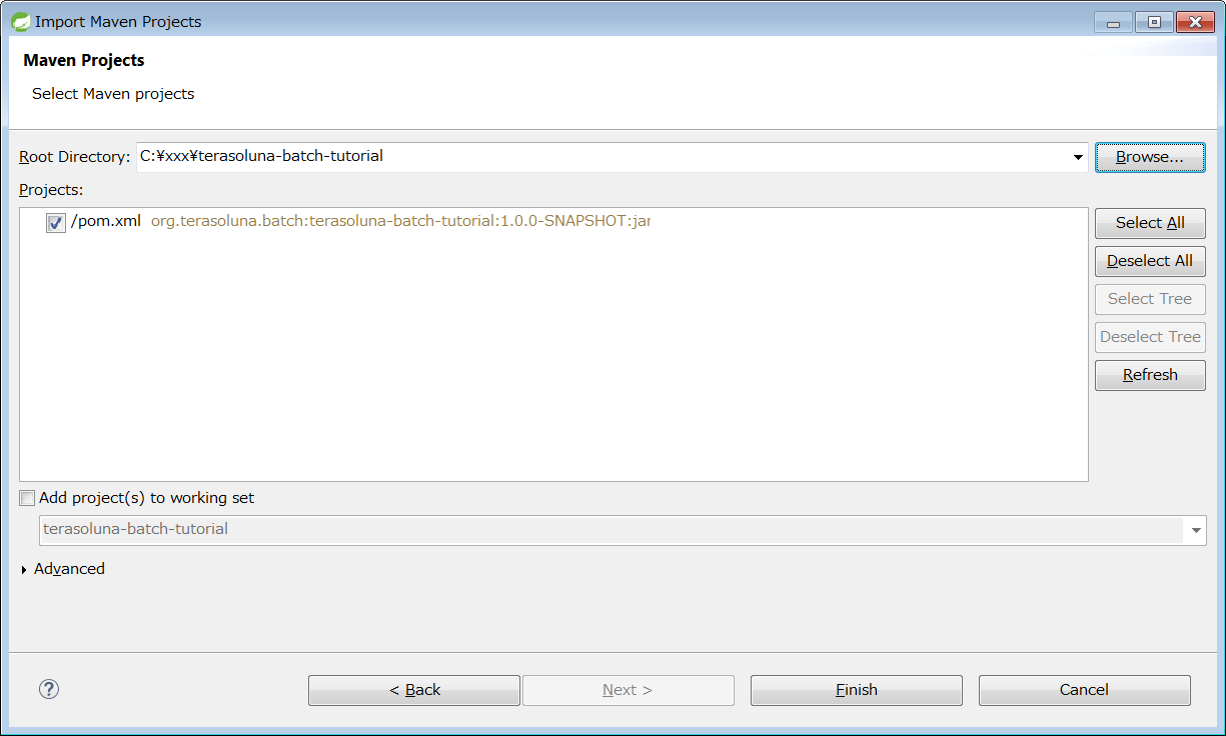

3.1.4.1. Import to IDE

Since the generated project is as per the project structure of Maven,

import as Maven project using various IDEs.

Detailed procedures are omitted.

3.1.4.2. Setting of entire application

Customize as follows depending on user status.

How to customize settings other than these by individual functions is explained.

3.1.4.2.1. Project information of pom.xml

As the following information is set with temporary values in the POM of the project, values should be set as per the status.

-

Project name(name element)

-

Project description(description element)

-

Project URL(url element)

-

Project inception year(inceptionYear element)

-

Project license(licenses element)

-

Project organization(organization element)

3.1.4.2.2. Database related settings

Database related settings are at many places, so each place should be modified.

<!-- (1) -->

<dependency>

<groupId>com.h2database</groupId>

<artifactId>h2</artifactId>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.postgresql</groupId>

<artifactId>postgresql</artifactId>

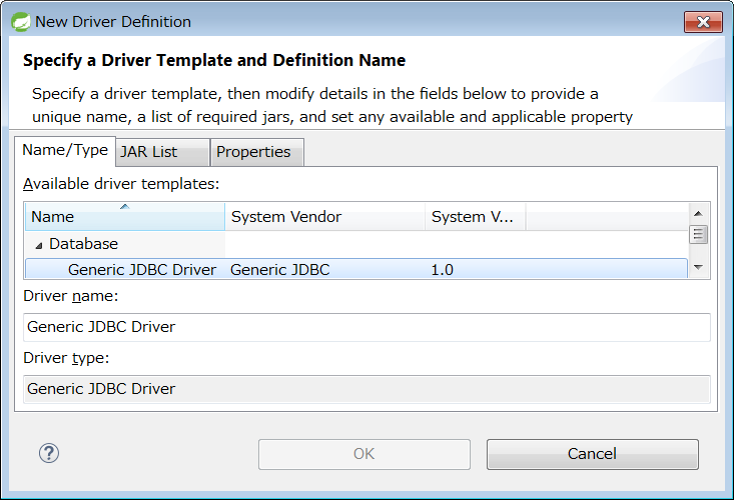

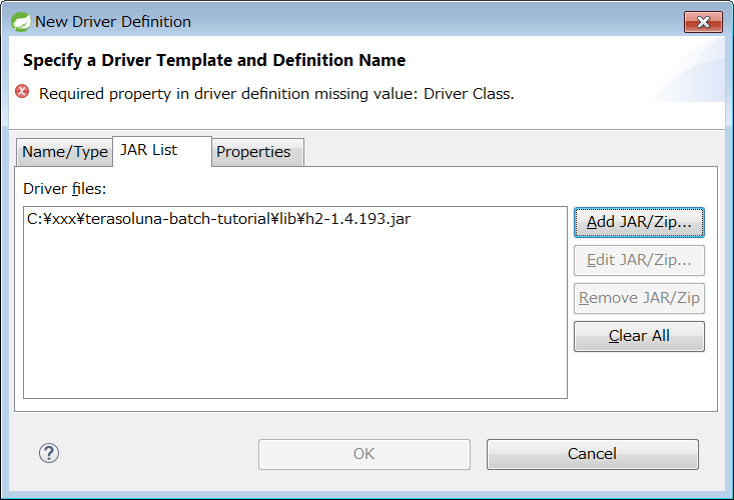

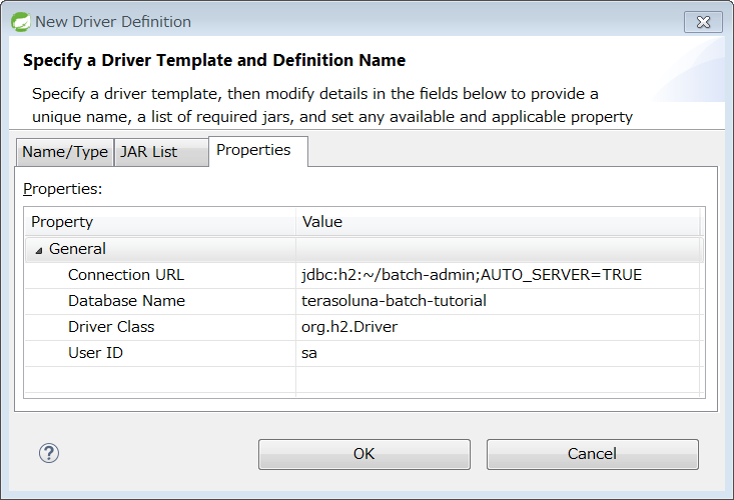

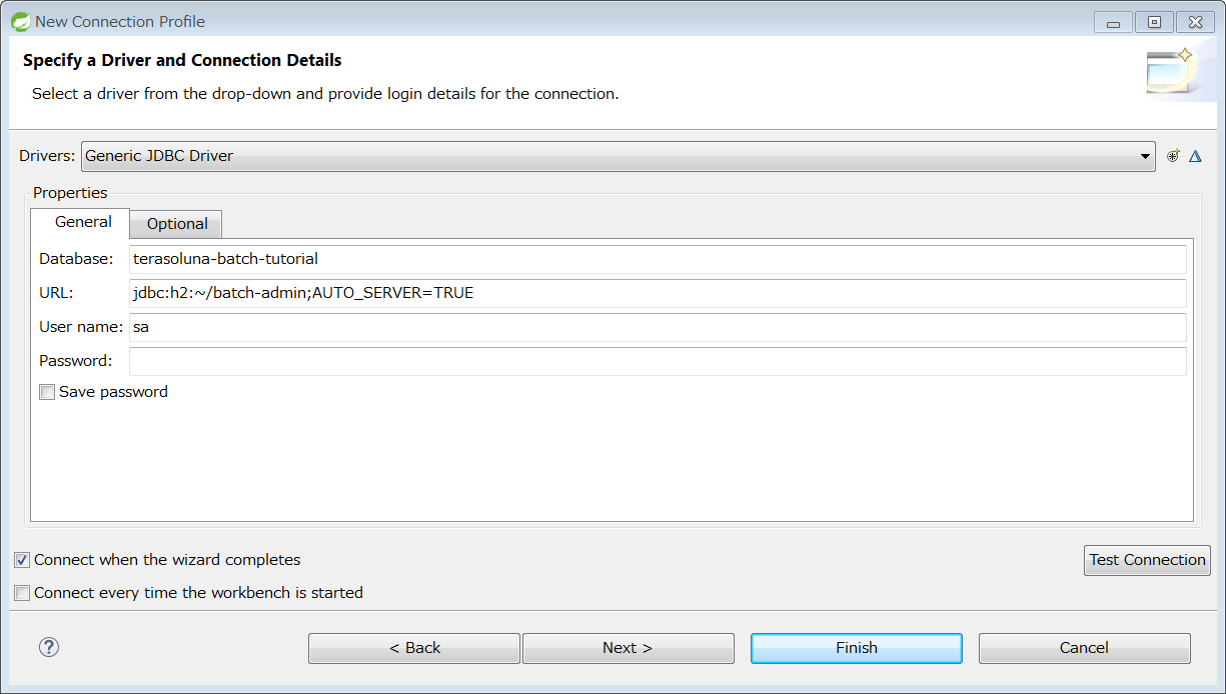

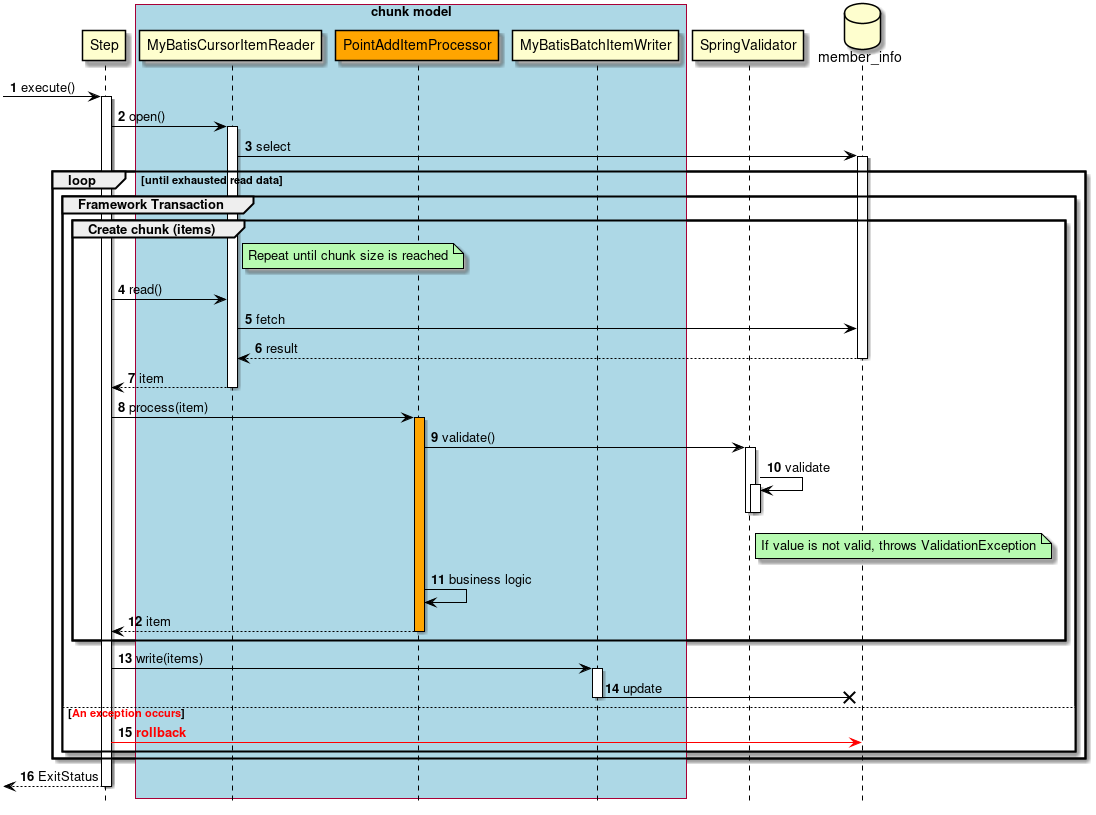

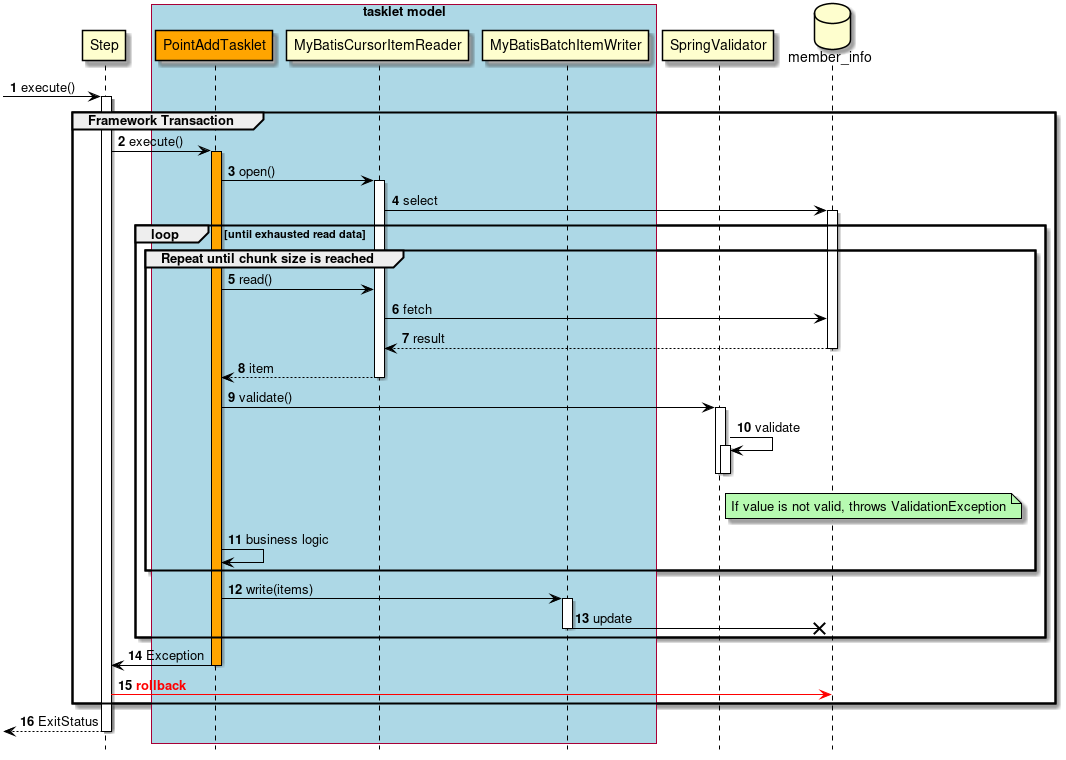

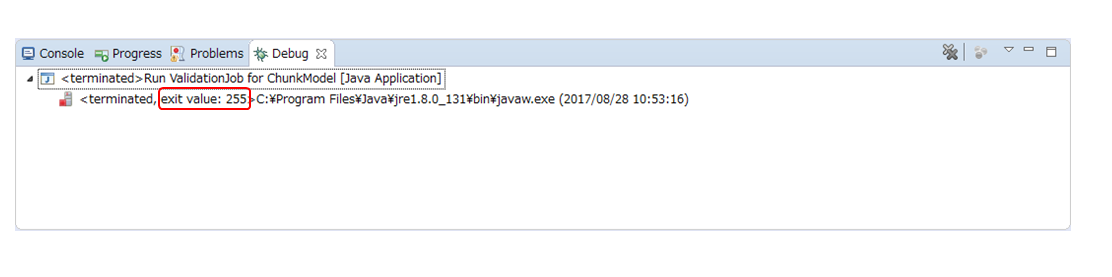

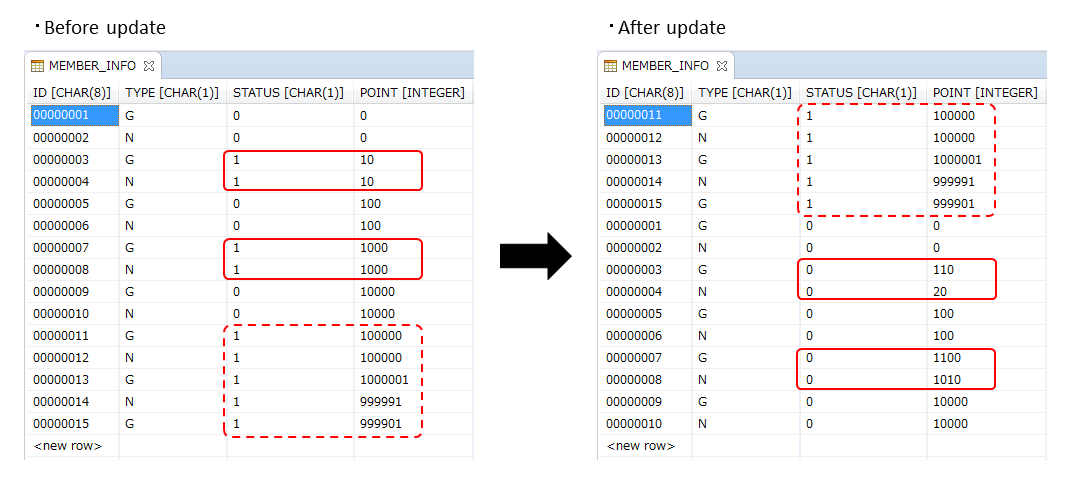

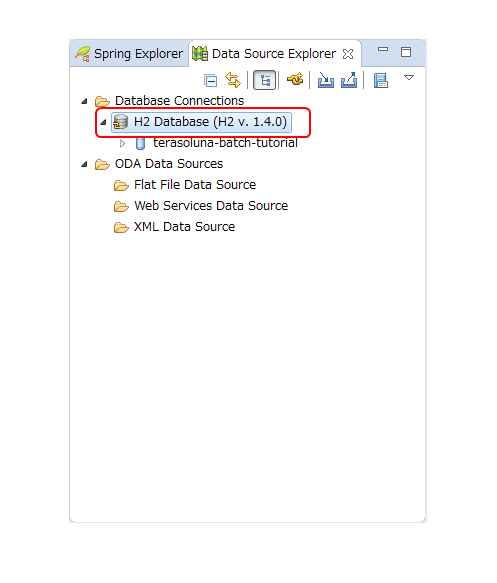

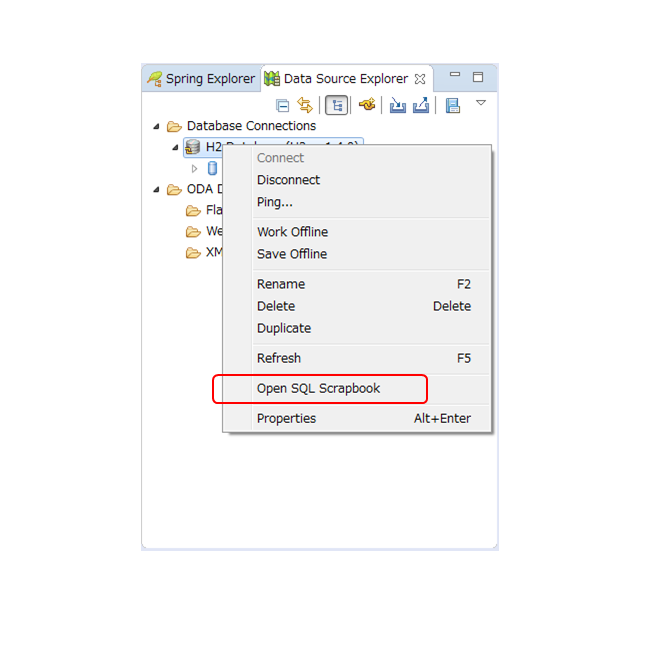

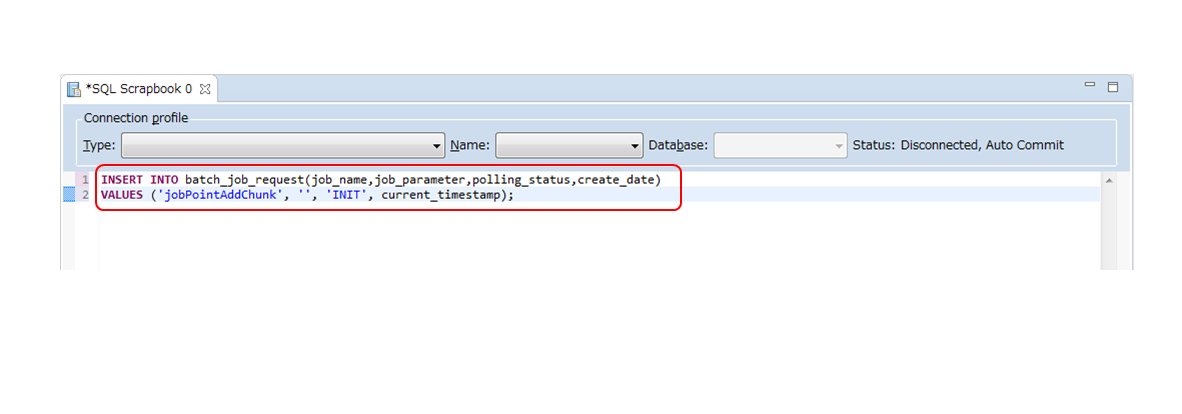

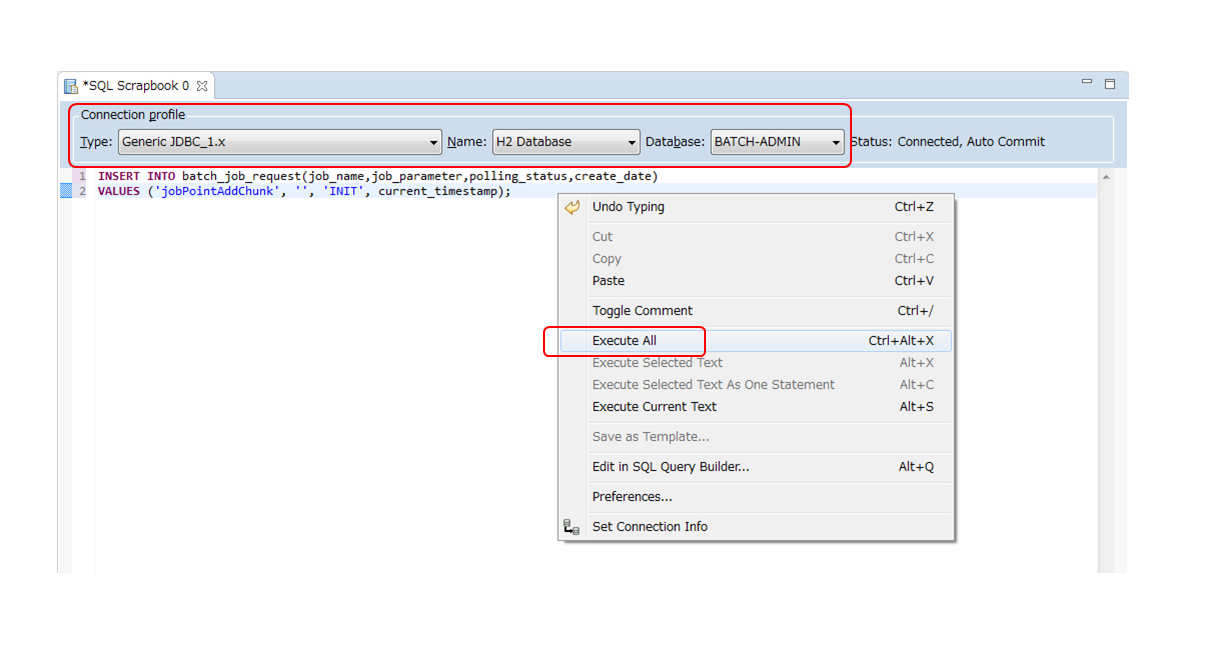

<scope>runtime</scope>